I recently wrote a post for my current employer about the joys of TDD.

You can check it out here.

What is your current approach to testing?

How can you spend the little time you have on the most important areas?

I thought I’d share some thoughts on where I see the best places to invest your test effort.

I got to thinking about this last night, even while I was asleep.

We are putting too much effort into our UI, user acceptance (UA) and system tests.

We are writing too many of them, so we’re creating a top-heavy test structure that will sooner or later topple.

These tests have their sweet spot, but they are slow, fragile and time-consuming to write.

We should have a small handful for each user story to provide some user acceptance coverage, and the rest should run without the UI and database (the slow and fragile bits).

We need to shift our mindset further down the test triangle.

I’ll try to explain why we should be doing the fewest manual tests, then progressively more GUI tests, UA tests and integration tests, and the most unit tests.

Try not to test the UI with the lower architectural layers included in the tests.

UI tests should have the lower layers mocked and / or stubbed.

Check out Dummy vs Fake vs Stub vs Mock
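People often use these terms interchangeably. Below is a minimal Python sketch of one common way to tell the four kinds of test double apart. `OrderService`, the large-order rule and the double classes are hypothetical examples. Only `unittest.mock.Mock` comes from the standard library.

```python
from unittest.mock import Mock


class OrderService:
    """Hypothetical service under test; all collaborators are injected."""

    def __init__(self, gateway, repository, notifier):
        self.gateway = gateway
        self.repository = repository
        self.notifier = notifier

    def place_order(self, order_id, amount):
        if not self.gateway.charge(amount):
            return False
        self.repository.save(order_id, amount)
        if amount > 1000:  # hypothetical rule: only large orders notify anyone
            self.notifier.send(f"large order {order_id}")
        return True


class DummyNotifier:
    """Dummy: only fills a constructor parameter; this test path never uses it."""

    def send(self, message):
        raise AssertionError("a dummy should never be called")


class StubGateway:
    """Stub: returns a canned answer so the test controls which path runs."""

    def __init__(self, approve):
        self.approve = approve

    def charge(self, amount):
        return self.approve


class FakeRepository:
    """Fake: a working but simplified implementation (in memory, no database)."""

    def __init__(self):
        self.orders = {}

    def save(self, order_id, amount):
        self.orders[order_id] = amount


# Stub, fake and dummy together: assert on the resulting state.
repo = FakeRepository()
service = OrderService(StubGateway(approve=True), repo, DummyNotifier())
assert service.place_order("A1", 25) is True
assert repo.orders == {"A1": 25}

# Mock: assert on the interaction itself (was charge called, and with what?).
gateway = Mock()
gateway.charge.return_value = False
service = OrderService(gateway, FakeRepository(), DummyNotifier())
assert service.place_order("A2", 10) is False
gateway.charge.assert_called_once_with(10)
```

Stubs, fakes and dummies support checks on the resulting state. A mock is useful when the interaction itself is the thing you want to verify.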

Full end-to-end system tests are not required to validate UI field constraints.
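As an illustration, suppose a quantity field must accept only whole numbers from 1 to 99 (a hypothetical rule). If the rule lives in a plain function, you can unit test it in milliseconds with no browser, web server or database involved. The sketch below uses Python's built-in `unittest`. `validate_quantity` is an illustrative name.

```python
import unittest


def validate_quantity(raw):
    """Hypothetical field rule: quantity must be a whole number from 1 to 99.

    Returns an error message for the UI to display, or None when valid.
    """
    text = raw.strip()
    if not text.isdecimal():
        return "Quantity must be a whole number"
    if not 1 <= int(text) <= 99:
        return "Quantity must be between 1 and 99"
    return None


class ValidateQuantityTests(unittest.TestCase):
    def test_accepts_value_in_range(self):
        self.assertIsNone(validate_quantity("3"))

    def test_rejects_zero(self):
        self.assertEqual(validate_quantity("0"),
                         "Quantity must be between 1 and 99")

    def test_rejects_non_numeric_input(self):
        self.assertEqual(validate_quantity("abc"),
                         "Quantity must be a whole number")
```

Run it with `python -m unittest`. The UI then needs only a test or two showing that it displays whatever message the function returns.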

Dependency injection really helps us here.
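Here is a minimal sketch of what that can look like. It assumes a hypothetical `BookPagePresenter` in the UI layer that receives its repository through its constructor rather than creating one itself. Because the dependency is injected, a test can hand it a stub and never touch the database.

```python
from typing import Dict, Optional, Protocol


class BookRepository(Protocol):
    """What the UI layer needs from the lower layers (normally database-backed)."""

    def find_title(self, isbn: str) -> Optional[str]: ...


class BookPagePresenter:
    """Hypothetical UI-layer class; its repository is injected, not created here."""

    def __init__(self, repository: BookRepository) -> None:
        self._repository = repository

    def heading_for(self, isbn: str) -> str:
        title = self._repository.find_title(isbn)
        return title if title is not None else "Book not found"


class StubBookRepository:
    """Stub that stands in for the database-backed repository in UI tests."""

    def __init__(self, titles: Dict[str, str]) -> None:
        self._titles = titles

    def find_title(self, isbn: str) -> Optional[str]:
        return self._titles.get(isbn)


# A UI-level test with the lower layers stubbed out: no database required.
presenter = BookPagePresenter(StubBookRepository({"123": "Sample Title"}))
assert presenter.heading_for("123") == "Sample Title"
assert presenter.heading_for("999") == "Book not found"
```

In production, the composition root or a DI container would pass in the real, database-backed repository instead, and the presenter itself would not change.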

When you explicitly test the upper levels of the test triangle, the layers below (or at least the immediately lower ones) are implicitly tested as well.

So you might think, cool, if we invest in the upper layers, we implicitly cover the lower layers.

That’s right, but the disadvantages of the higher level tests outweigh the advantages.

UI tests, especially ones that go from end to end, should be avoided or kept very few in number, as they are fragile and incur high maintenance costs.

If we create too many of these, confidence in their value diminishes.

Read on and you’ll find out why.

Let’s look at cost vs value to the business.

Some tests cost a lot to create and modify.

Some cost little to create and modify.

Some yield high value.

Some yield low value.

We only have so much time for testing, so let’s use it in the areas that provide the greatest value to the business.

Greatest value, of course, will be measured differently for each feature.

There is no stock standard answer here, only guidelines.

What we’re aiming for is to spend the minimum effort (cost) and get the maximum benefit (value).

Not the other way around…

With the following set of scales, we’ve spent too much in the wrong areas, yielding suboptimal value.

It’s worth the effort to get under the UI layer and do the required setup, including mocking the layers below.

It’s also not too hard to get around the likes of the HttpContext hierarchy of classes (HttpRequest, HttpResponse and so on) encountered in ASP.NET Web Forms and MVC.
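The general trick is to make your code depend on a small abstraction of the request rather than on the framework's concrete request object. In ASP.NET MVC, the abstract `HttpContextBase` and `HttpRequestBase` classes exist for this purpose. The Python sketch below shows the same pattern. `RequestLike`, `FakeRequest` and `greeting_handler` are hypothetical names, not part of any web framework.

```python
from typing import Mapping, Optional, Protocol, Tuple


class RequestLike(Protocol):
    """The small slice of an HTTP request that the handler actually uses."""

    query: Mapping[str, str]
    cookies: Mapping[str, str]


def greeting_handler(request: RequestLike) -> Tuple[int, str]:
    """Hypothetical handler returning (status_code, body); no server needed."""
    name = request.query.get("name") or request.cookies.get("remembered_name")
    if not name:
        return 400, "Please tell us your name"
    return 200, f"Hello, {name}"


class FakeRequest:
    """Hand-rolled fake: plain dictionaries instead of a framework request."""

    def __init__(self, query: Optional[Mapping[str, str]] = None,
                 cookies: Optional[Mapping[str, str]] = None) -> None:
        self.query = query or {}
        self.cookies = cookies or {}


assert greeting_handler(FakeRequest(query={"name": "Ada"})) == (200, "Hello, Ada")
assert greeting_handler(FakeRequest(cookies={"remembered_name": "Bob"})) == (200, "Hello, Bob")
assert greeting_handler(FakeRequest()) == (400, "Please tell us your name")
```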

Beware

- The higher level tests get progressively more expensive to create and maintain.

- They are slower to run, which means they don’t run as part of CI, but perhaps as part of the nightly build. This means more latency in the development cycle, and developers are less likely to run them manually.

- When they break, it takes longer to locate the fault, as you have all the layers below to go through.

Unreliable tests are a major cause of teams ignoring, or losing confidence in, automated tests.

UI and acceptance tests, followed by integration tests, are usually the culprits.

Once confidence is lost, the value of the initial investment in automated tests is significantly reduced.

Fixing failing tests and resolving issues associated with brittle tests should be a priority to remove false positives.

Planning the test effort

This is usually the first step we take when starting work on a user story or any new feature.

For Product Backlog items with enough use cases to make it worthwhile, we usually create a set of Test Conditions (Given/When/Then):

| Given | When | Then |
|---|---|---|
| There are no items in the shopping cart | Customer clicks “Purchase” button for a book which is in stock | 1 x book is added to shopping cart. Book is held, preventing it from being sold twice. |
| There are no items in the shopping cart | Customer clicks “Purchase” button for a book which is not in stock | Dialog with “Out of stock” message is displayed, offering the customer the option of putting the book on back order. |
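You can often make test conditions like these executable below the UI. The sketch below assumes a hypothetical in-memory `Store` domain class and uses Python's `unittest`. The Given/When/Then comments map each test back to a row of the table. The tests check the message the domain returns rather than the dialog itself. That keeps them fast and leaves displaying the dialog to a small number of UI tests.

```python
import unittest


class Store:
    """Hypothetical domain model: just enough to express the two conditions."""

    def __init__(self, stock):
        self.stock = dict(stock)  # isbn -> copies still available to sell
        self.held = {}            # isbn -> copies held for this customer
        self.cart = []            # isbns in this customer's shopping cart

    def purchase(self, isbn):
        """Customer clicks "Purchase"; returns a message for the UI, or None."""
        if self.stock.get(isbn, 0) > 0:
            self.stock[isbn] -= 1
            self.held[isbn] = self.held.get(isbn, 0) + 1
            self.cart.append(isbn)
            return None
        return "Out of stock. Would you like to put this book on back order?"


class PurchaseBookConditions(unittest.TestCase):
    def test_in_stock_book_is_added_to_cart_and_held(self):
        # Given there are no items in the shopping cart
        store = Store(stock={"book-1": 1})
        # When the customer clicks "Purchase" for a book which is in stock
        message = store.purchase("book-1")
        # Then 1 x book is added to the cart and held so it can't be sold twice
        self.assertIsNone(message)
        self.assertEqual(store.cart, ["book-1"])
        self.assertEqual(store.held, {"book-1": 1})
        self.assertEqual(store.stock["book-1"], 0)

    def test_out_of_stock_book_offers_back_order(self):
        # Given there are no items in the shopping cart
        store = Store(stock={"book-2": 0})
        # When the customer clicks "Purchase" for a book which is not in stock
        message = store.purchase("book-2")
        # Then an "Out of stock" message offering a back order is returned
        self.assertIn("Out of stock", message)
        self.assertIn("back order", message)
        self.assertEqual(store.cart, [])
```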

Where we don’t create Test Conditions, we have a Test Condition workshop.

In the workshop we look at the What, How, Who and Why in that order.

The test quadrant (pictured below) assists us in this.

In the workshop, we write the previously recorded Acceptance Criteria on a board (the What) and discuss the most effective way to verify that the conditions are met (the How).

For the How, we look at the test triangle and the test quadrant and decide where our time is most effectively spent.

Test condition workshop

In the test condition workshop, held when we start on a user story (generally a feature in the sprint backlog), we plan where we are going to spend our test resources.

Think about What, and sometimes Who, but not How.

The How comes last.

Unit tests are the developers’ bread and butter.

They are cheap to create and modify, and they consistently yield good value not only to the developers but, implicitly, to most or all other areas.

This is why they sit at the bottom of the test triangle.

This is why TDD is as strong as it is today.

The hierarchy of criteria that guides us:

- Release Criteria: ultimately controlled by the Product Owner or release manager.

- Acceptance Criteria: also owned by the Product Owner, and attached to each user story (or, more correctly, each product backlog item). The Development Team must meet these in order to fulfill the Definition of Done.

- Test Conditions: when executable, these confirm that the Development Team has satisfied the requirements of the product backlog item.

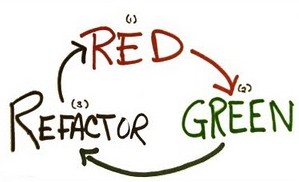

Write your tests first

TDD is not about testing, it’s about creating better designs.

It forces us to design better software: testable, modular, with separated concerns and following the Single Responsibility Principle. It leads us down the path of the SOLID principles.

- Write a unit test.

- Run it and watch it fail (because the production code is not yet written).

- Write just enough production code to make the test pass.

- Re-run the test and watch it pass.
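Here is one pass through that cycle, sketched in Python with `unittest`. `cart_total` is a hypothetical function. The comments mark which step of the cycle each part belongs to.

```python
import unittest


# Step 1: write the unit tests first. Running them before cart_total exists
# makes both tests error with a NameError, which is the "watch it fail" step.
class CartTotalTests(unittest.TestCase):
    def test_empty_cart_totals_zero(self):
        self.assertEqual(cart_total([]), 0)

    def test_total_is_sum_of_price_times_quantity(self):
        self.assertEqual(cart_total([(10, 2), (5, 1)]), 25)


# Step 2: write just enough production code to make the tests pass.
def cart_total(lines):
    """Sum price * quantity over (price, quantity) pairs."""
    return sum(price * quantity for price, quantity in lines)


# Step 3: re-run the tests (for example with `python -m unittest`) and
# watch them pass.
```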

This podcast about TDD has lots of good information.

Continuous Integration

Realise the importance of setting up CI and nightly builds.

The benefits of having your unit (fast-running) tests executed automatically and regularly are great.

You get rapid feedback, which is crucial to an agile team completing features on time.

Tests that are not run regularly risk failing without anyone noticing.

The sooner you find a failing test, the easier it is to fix the code.

The longer it’s left unattended, the more technical debt you accrue and the more effort is required to hunt down the fault.

Make the effort to get your tests running on each commit or push.

Nightly Builds

The slower-running tests (that’s all the automated tests above unit tests on the triangle) need to be run as part of a nightly build.

We can’t have these running as part of the CI because they are just too slow.
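One way to keep the two apart, sketched with Python's `unittest`, is to mark the slow tests with a skip decorator. The decorator lets them run only when the nightly build sets an environment variable. The variable name `NIGHTLY_BUILD`, the `slow` decorator and the test names are assumptions for illustration.

```python
import os
import unittest

# Hypothetical convention: the nightly build job sets NIGHTLY_BUILD=1, while
# the CI run on each commit leaves it unset, so slow tests are skipped there.
RUN_SLOW_TESTS = os.environ.get("NIGHTLY_BUILD") == "1"
slow = unittest.skipUnless(RUN_SLOW_TESTS, "slow test: nightly build only")


def apply_discount(price_cents, percent):
    """Hypothetical production code covered by the fast unit test."""
    return price_cents * (100 - percent) // 100


class CheckoutTests(unittest.TestCase):
    def test_discount_is_applied(self):
        # Fast unit test: runs on every commit.
        self.assertEqual(apply_discount(1000, 10), 900)

    @slow
    def test_checkout_end_to_end(self):
        # Slow test: in a real suite this would drive the UI against a real
        # database. Left as a placeholder in this sketch.
        pass
```

The nightly job would then run something like `NIGHTLY_BUILD=1 python -m unittest` to include the slow tests.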

If something gets in the way of a developer’s workflow, it won’t get done.

Pair Review

Don’t forget to pair review all code written.

In my current position, we’ve been requesting reviews verbally and responding via email or with comments on paper.

This is not ideal and we’re currently evaluating review software, of which there are many offerings.