Risks

- Passwords and other secrets for data-stores, syslog servers, monitoring services, email accounts and so on are useful to an attacker. They can be used to compromise the data-stores, to obtain further secrets from email accounts, file servers, system logs and the services being monitored, and may even provide the credentials needed to keep moving through the network compromising other machines.

- Passwords and/or their hashes travelling over the network.

Data-store Compromise

I have tagged this as moderate because, if you take the countermeasures, it doesn’t have to be a disaster.

There are many examples of this happening on a daily basis to millions of users. The Ashley Madison debacle is a good example. Ashley Madison’s entire business relied on its commitment to keep the data of its clients (37 million of them) secret, and to provide discretion and anonymity.

“Before the breach, the company boasted about airtight data security but ironically, still proudly displays a graphic with the phrase “trusted security award” on its homepage”

“We worked hard to make a fully undetectable attack, then got in and found nothing to bypass…. Nobody was watching. No security. Only thing was segmented network. You could use Pass1234 from the internet to VPN to root on all servers.”

“Any CEO who isn’t vigilantly protecting his or her company’s assets with systems designed to track user behavior and identify malicious activity is acting negligently and putting the entire organization at risk. And as we’ve seen in the case of Ashley Madison, leadership all the way up to the CEO may very well be forced out when security isn’t prioritized as a core tenet of an organization.”

Dark Reading

Other notable data-store compromises include LinkedIn, with 6.5 million user accounts compromised and 95% of the users’ passwords cracked within days. Why so fast? Because the passwords were protected with nothing more than a simple hash, specifically SHA-1. eBay, with 145 million active buyers, is another. Many others come to light regularly.

Are you using well-salted, high-quality key derivation functions (KDFs) for all of your sensitive data? Are you telling your customers to use high-quality passwords? Are you informing them what a high-quality password is? Consider checking new user credentials against a list of the most frequently used and insecure passwords.
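Checking new credentials against a list of frequently used passwords could be sketched roughly as follows. This is a minimal illustration only: the five-entry list, the function names and the minimum length parameter are all assumptions made for the example, and a real deployment would load a far larger list.

```javascript
'use strict';

// Hypothetical, tiny sample list. A real deployment would load a much larger
// list of frequently used passwords from a file or service.
const commonPasswords = new Set(['123456', 'password', 'pass1234', 'qwerty', 'letmein']);

// Returns true when the candidate appears in the common list.
// The comparison is case-insensitive, so 'Pass1234' is caught as well.
function isCommonPassword(candidate) {
  return commonPasswords.has(String(candidate).toLowerCase());
}

// Returns the reasons a new password should be rejected (empty when acceptable).
function passwordProblems(candidate, minLength) {
  const problems = [];
  if (candidate.length < minLength) problems.push('too short');
  if (isCommonPassword(candidate)) problems.push('too common');
  return problems;
}
```

Returning the list of reasons, rather than a plain true/false, makes it easy to tell the user what a higher quality password would look like.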

Countermeasures

Secure password management within applications is a case of doing what you can, often relying on obscurity and leaning on other layers of defence to make compromise harder, like many of the layers already discussed in my book.

Find out how secret the supposedly secret data being sent over the network actually is, and consider your internal network to be just as malicious as the internet. Then you will be starting to get the idea of what defence in depth is about. That way, when one defence breaks down, you will still be in good standing.

You may read in many places that having data-store passwords and other types of secrets in configuration files in clear text is an insecurity that must be addressed. When it comes to mitigation, there seem to be a few techniques that help, but most of them are based around obscuring the secret rather than securing it. Essentially they just make discovery a little more inconvenient, like running SSH on a port other than the default of 22. Maybe surprisingly though, obscurity does significantly reduce the number of opportunistic attacks from bots and script kiddies.

Store Configuration in Configuration files

Do not hard code passwords in source files for all developers to see. Doing so also means the code has to be patched when services are breached. At the very least, store them in configuration files and use different configuration files for different deployments and consider keeping them out of source control.

Here are some examples using the node-config module.

node-config is a fully featured, well maintained configuration package that I have used on a good number of projects.

To install (the package is published to npm under the name config): from the command line within the root directory of your NodeJS application, run:

    npm install config --save

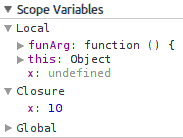

Now you are ready to start using node-config. An example of the relevant section of an app.js file may look like the following:

    var path = require('path');

    // Due to a bug in node-config, the if statement is required before config is required.
    // https://github.com/lorenwest/node-config/issues/202
    if (process.env.NODE_ENV === 'production') {
      process.env.NODE_CONFIG_DIR = path.join(__dirname, 'config');
    }

Wherever you use node-config, in your routes for example:

    var config = require('config');
    var nodemailer = require('nodemailer');
    var enquiriesEmail = config.enquiries.email;

    // Setting up email transport.
    var transporter = nodemailer.createTransport({
      service: config.enquiries.service,
      auth: {
        user: config.enquiries.user,
        pass: config.enquiries.pass // App specific password.
      }
    });

A good collection of different formats can be used for the config files: .json, .json5, .hjson, .yaml, .js, .coffee, .cson, .properties, .toml

There is a specific file loading order, which you control by file naming convention. This provides a lot of flexibility and caters for:

- Having multiple instances of the same application running on the same machine

- The use of short and full host names to mitigate machine naming collisions

- The type of deployment. This can be anything you set the $NODE_ENV environment variable to, for example: development, production, staging, whatever.

- Using and creating config files which stay out of source control. These config files have a prefix of local. They are to be managed by external configuration management tools, build scripts, etc., thus providing even more flexibility about where your sensitive configuration values come from.

The config files for the required attributes used above may take the following directory structure:

    OurApp/
    |
    +-- config/
    |   |
    |   +-- default.js (usually has the most in it)
    |   |
    |   +-- devbox1-development.js
    |   |
    |   +-- devbox2-development.js
    |   |
    |   +-- stagingbox-staging.js
    |   |
    |   +-- prodbox-production.js
    |   |
    |   +-- local.js (created by build)
    |
    +-- routes
    |   |
    |   +-- home.js
    |   |
    |   +-- ...
    |
    +-- app.js (entry point)
    |
    +-- ...

The contents of the above example configuration files may look like the following:

    // default.js
    module.exports = {
      enquiries: {
        // Supported services:
        // https://github.com/andris9/nodemailer-wellknown#supported-services
        // supported-services actually use the best security settings by default.
        // I tested this with a wire capture, because it is always the most fool proof way.
        service: 'FastMail',
        email: 'yourusername@fastmail.com',
        user: 'yourusername',
        pass: null
      }
      // Lots of other settings.
      // ...
    }

    // devbox1-development.js
    module.exports = {
      enquiries: {
        // Test password for developer on devbox1
        pass: 'D[6F9,4fM6?%2ULnirPVTk#Q*7Z+5n' // App specific password.
      }
    }

    // devbox2-development.js
    module.exports = {
      enquiries: {
        // Test password for developer on devbox2
        pass: 'eUoxK=S9<,`@m0T1=^(EZ#61^5H;.H' // App specific password.
      }
    }

    // stagingbox-staging.js
    {
    }

    // prodbox-production.js
    {
    }

    // local.js
    // Build creates this file.
    module.exports = {
      enquiries: {
        // Password created by the build.
        pass: '10lQu$4YC&x~)}lUF>3pm]Tk>@+{N]' // App specific password.
      }
    }

node-config also:

- Provides command line overrides, allowing you to override configuration values from the command line at application start

- Allows configuration values to be overridden by environment variables, using mappings defined in a custom-environment-variables.json file
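To make the environment variable override idea concrete, here is a minimal sketch of the concept in plain JavaScript. It is not node-config's implementation, and node-config does this work for you. The mapping object, the ENQUIRIES_PASS variable name and the applyEnvOverrides function are all hypothetical names chosen for illustration.

```javascript
'use strict';

// Hypothetical mapping in the spirit of a custom-environment-variables file:
// each leaf names the environment variable that may override that setting.
const envMapping = { enquiries: { pass: 'ENQUIRIES_PASS' } };

// Returns a copy of config in which every mapped setting is replaced by the
// value of its environment variable, when that variable is set.
function applyEnvOverrides(config, mapping, env) {
  const result = Object.assign({}, config);
  Object.keys(mapping).forEach(function (key) {
    const target = mapping[key];
    if (typeof target === 'string') {
      if (env[target] !== undefined) result[key] = env[target];
    } else {
      result[key] = applyEnvOverrides(config[key] || {}, target, env);
    }
  });
  return result;
}

const defaults = { enquiries: { service: 'FastMail', user: 'yourusername', pass: null } };

// In an application, process.env would be passed as the third argument.
const effective = applyEnvOverrides(defaults, envMapping, { ENQUIRIES_PASS: 'set-by-deployment' });
```

The secret then only needs to exist in the environment of the deployed process, and the defaults kept in source control can leave it as null.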

Encrypting/decrypting credentials in code may provide some obscurity, but not much more than that.

There are different answers for different platforms. None of them provide complete security, if there is such a thing. Instead they focus on different levels of obscurity.

Windows

Store database credentials as a Local Security Authority (LSA) secret and create a DSN with the stored credential. Use a SQL Server connection string with Trusted_Connection=yes.

The hashed credentials are stored in the SAM file and the registry. If an attacker has physical access to the storage, they can easily copy the hashes if the machine is not running or can be shut down. The hashes can be sniffed from the wire in transit. The hashes can also be pulled from the running machine’s memory (specifically from the Local Security Authority Subsystem Service (LSASS.exe)) using tools such as Mimikatz, WCE, hashdump or fgdump. An attacker generally only needs the hash. Trusted tools like psexec take care of this for us. All of this is discussed in my “0wn1ng The Web” presentation.

Encrypt sections of web, executable, machine-level and application-level configuration files using aspnet_regiis.exe with the -pe option, the name of the configuration element to encrypt and the configuration provider you want to use: either DataProtectionConfigurationProvider (uses DPAPI) or RSAProtectedConfigurationProvider (uses RSA). The -pd switch is used to decrypt, or the value can be read programmatically:

    string connStr = ConfigurationManager.ConnectionStrings["MyDbConn1"].ConnectionString;

Of course there is a problem with this also. DPAPI uses LSASS, and again an attacker can extract the hash from its memory. If the RSAProtectedConfigurationProvider has been used, a key container is required. Mimikatz will force an export from the key container to a .pvk file, which can then be read using OpenSSL or tools from the Mono.Security assembly.

I have looked at a few other ways using PSCredential and SecureString. They all seem to rely on DPAPI, which as mentioned uses LSASS, which is open to exploitation.

Credential Guard and Device Guard leverage virtualisation-based security, by the look of it still using LSASS. Bromium have partnered with Microsoft and coined the term micro-virtualization. The idea is that every user task is isolated into its own micro-VM. There seems to be some confusion as to how this is any better. Tasks still need to communicate outside of their VM, so what is to stop malicious code doing the same? I have seen lots of questions but no compelling answers yet. At the time of writing, Credential Guard must run directly on physical hardware. It can not run on virtual machines. This alone rules out many deployments.

“Bromium vSentry transforms information and infrastructure protection with a revolutionary new architecture that isolates and defeats advanced threats targeting the endpoint through web, email and documents”

“vSentry protects desktops without requiring patches or updates, defeating and automatically discarding all known and unknown malware, and eliminating the need for costly remediation.”

This is marketing talk. Please don’t take this literally.

“vSentry empowers users to access whatever information they need from any network, application or website, without risk to the enterprise”

“Traditional security solutions rely on detection and often fail to block targeted attacks which use unknown “zero day” exploits. Bromium uses hardware enforced isolation to stop even “undetectable” attacks without disrupting the user.”

Bromium

“With Bromium micro-virtualization, we now have an answer: A desktop that is utterly secure and a joy to use”

Bromium

These seem like bold claims.

Also worth considering is that Microsoft’s new virtualization-based security also relies on UEFI Secure Boot, which has been proven insecure.

Linux

Containers also help to provide some form of isolation, allowing you to have only the user accounts needed to do what is necessary for the application.

I usually use a deployment tool that also changes the permissions and ownership of the files involved with the running web application to a single system user, so unprivileged users can not access the web application’s files at all. The deployment script is executed over SSH in a remote shell. Only specific commands on the server are allowed to run and a very limited set of users have any sort of access to the machine. If you are using Linux Containers, then you can reduce this even further.
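As a small illustration of locking file permissions down, the following sketch checks that a configuration file is accessible only by its owning user, and restricts it when it is not. The function names are hypothetical, and the sketch assumes a POSIX file system such as the ones found on GNU/Linux. Changing ownership is left to your deployment tool.

```javascript
'use strict';
const fs = require('fs');

// Returns true when neither group nor others have any permission bits set
// on the file, i.e. only the owning user can read, write or execute it.
function isOwnerOnly(filePath) {
  const mode = fs.statSync(filePath).mode;
  return (mode & 0o077) === 0;
}

// Hypothetical helper: restrict a config file to read/write by its owner only.
function lockDown(filePath) {
  fs.chmodSync(filePath, 0o600);
}
```

A check like isOwnerOnly could be run at application start, refusing to load secrets from a file that other users on the machine can read.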

One of the beauties of GNU/Linux is that you can have as much or as little security as you decide. No one has made that decision for you already and locked you out of the source. You are not fed lies, as you are by all of the closed source OS vendors trying to pimp their latest money spinning product. GNU/Linux is a dirty little secret that requires no marketing hype. It just provides complete control if you want it. If you do not know what you want, then someone else will probably take that control from you. It is just a matter of time, if it hasn’t happened already.

Least Privilege

An application should have the least privileges possible in order to carry out what it needs to do. Consider creating accounts for each trust distinction. For example, where you only need to read from a data-store, create that connection with the credentials of a user that is only allowed to read, and so on for other privileges. This way the attack surface is minimised, adhering to the principle of least privilege. Also consider removing table access completely from the application, and only providing the application with permissions to run stored queries. This way, if/when an attacker is able to compromise the machine and retrieve the password for an action on the data-store, they will not be able to do a lot anyway.
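One way of keeping an account per trust distinction visible in application code is sketched below. Everything here is hypothetical and for illustration only: the account names, the operations, and the DB_READER_PASS and DB_WRITER_PASS environment variables. The data-store itself must still enforce what each account is permitted to do.

```javascript
'use strict';

// Hypothetical accounts: each has only the data-store permissions its name implies.
const accounts = {
  read: { user: 'app_reader', pass: process.env.DB_READER_PASS },
  write: { user: 'app_writer', pass: process.env.DB_WRITER_PASS }
};

// Map each application operation to the least privileged account able to perform it.
const operationAccount = { listOrders: 'read', showOrder: 'read', createOrder: 'write' };

// Returns the credentials to connect with for the given operation,
// and refuses any operation that has not been explicitly mapped.
function credentialsFor(operation) {
  if (!Object.prototype.hasOwnProperty.call(operationAccount, operation)) {
    throw new Error('No account defined for operation: ' + operation);
  }
  return accounts[operationAccount[operation]];
}
```

Refusing unmapped operations by default means a newly added operation has to be consciously assigned a privilege level before it can reach the data-store.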

Location

Put your services like data-stores on network segments that are as sheltered as possible and only contain similar services.

Maintain as few user accounts on the servers in question as possible, with the least privileges possible.

Data-store Compromise

As part of your defence in depth strategy, you should expect that your data-store is going to get stolen, but hope that it does not. What assets within the data-store are sensitive? How are you going to stop an attacker that has gained access to the data-store from making sense of the sensitive data?

As part of developing the application that uses the data-store, a strategy also needs to be developed and implemented to carry on business as usual when this happens. For example, when your detection mechanisms realise that someone unauthorised has been on the machine(s) that host your data-store, as well as the usual alerts being fired off to the people that are going to investigate and audit, your application should take some automatic measures like:

- All following logins should be instructed to change passwords
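Such an automatic measure could be sketched as follows. This is only an assumption about how it might be wired up: recordCompromise would be called by your detection mechanism, and passwordChangedAt is a hypothetical field on your user records.

```javascript
'use strict';

// Hypothetical state, set by the detection/alerting mechanism when unauthorised
// access to the data-store host is noticed. null means no known compromise.
let compromiseDetectedAt = null;

function recordCompromise(when) {
  compromiseDetectedAt = when;
}

// A user must change their password at next login if it was last set
// before the compromise was detected.
function mustChangePassword(user) {
  return compromiseDetectedAt !== null && user.passwordChangedAt < compromiseDetectedAt;
}
```

Comparing against the time each password was last changed means users who have already changed their password since the incident are not asked again.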

If you follow the recommendations below, data-store theft will be an inconvenience, but not a disaster.

Consider what sensitive information you really need to store. Consider using the following key derivation functions (KDFs) for all sensitive data, not just passwords. Also continue to remind your customers to always use unique passwords that are made up of upper-case, lower-case, numeric and special characters. It is also worth considering pushing the use of high quality password vaults. Do not limit password lengths. Encourage long passwords.

PBKDF2, bcrypt and scrypt are KDFs that are designed to be slow. They are used in a process commonly known as key stretching. How long key stretching takes can be tuned by increasing or decreasing the number of cycles used, often 1000 cycles or more for passwords.

“The function used to protect stored credentials should balance attacker and defender verification. The defender needs an acceptable response time for verification of users’ credentials during peak use. However, the time required to map <credential> -> <protected form> must remain beyond threats’ hardware (GPU, FPGA) and technique (dictionary-based, brute force, etc) capabilities.”

OWASP Password Storage

PBKDF2, bcrypt and the newer scrypt apply a Pseudorandom Function (PRF) such as a cryptographic hash, cipher or HMAC to the data being received, along with a unique salt. The salt should be stored with the hashed data.
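A minimal sketch of storing the salt with the derived data, using the PBKDF2 implementation in Node's built-in crypto module, might look like the following. The iteration count, key length, digest and the '$' separated storage format are assumptions chosen for illustration, not recommendations. Tune the iteration count against your own hardware and response time requirements.

```javascript
'use strict';
const crypto = require('crypto');

// Assumed parameters for illustration only.
const ITERATIONS = 100000;
const KEY_LENGTH = 32; // bytes
const DIGEST = 'sha256';

// Derive a key from the password with a fresh random salt, and return
// everything needed for later verification in one storable string.
function protect(password) {
  const salt = crypto.randomBytes(16);
  const derived = crypto.pbkdf2Sync(password, salt, ITERATIONS, KEY_LENGTH, DIGEST);
  return [ITERATIONS, salt.toString('hex'), derived.toString('hex')].join('$');
}

// Re-derive using the stored salt and iteration count, then compare in constant time.
function verify(password, stored) {
  const parts = stored.split('$');
  const iterations = parseInt(parts[0], 10);
  const salt = Buffer.from(parts[1], 'hex');
  const expected = Buffer.from(parts[2], 'hex');
  const actual = crypto.pbkdf2Sync(password, salt, iterations, expected.length, DIGEST);
  return crypto.timingSafeEqual(actual, expected);
}
```

Storing the iteration count alongside the salt allows the count to be raised for new passwords later, without breaking verification of the ones already stored.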

Do not use MD5, SHA-1 or the SHA-2 family of cryptographic one-way hashing functions by themselves for cryptographic purposes like hashing your sensitive data. In fact, do not use hashing functions at all for this unless they are leveraged with one of the mentioned KDFs. Why? Because the hashing speed can not be slowed as hardware continues to get faster. Many organisations that have had their data-stores stolen, and continue to on a weekly basis, could avoid their secrets being compromised simply by using a decent KDF with salt and a decent number of iterations.

“Using four AMD Radeon HD6990 graphics cards, I am able to make about 15.5 billion guesses per second using the SHA-1 algorithm.”

Per Thorsheim

In saying that, PBKDF2 can use MD5, SHA-1 and the SHA-2 family of hashing functions. Bcrypt uses the Blowfish (more specifically the Eksblowfish) cipher. Unlike PBKDF2, scrypt does not have user replaceable parts: its PRF can not be changed from SHA-256 to something else.

Which KDF To Use?

This depends on many considerations. I am not going to tell you which is best, because there is no best. You are going to have to gain an understanding of at least all three KDFs. PBKDF2 is the oldest, so it is the most battle tested, but there have also been lessons learnt from it that have been taken into the latter two. The next oldest is bcrypt, which uses the Eksblowfish cipher. Eksblowfish was designed specifically for bcrypt from the Blowfish cipher to be very slow to initiate, thus boosting protection against dictionary attacks, which were often run on custom Application-specific Integrated Circuits (ASICs) with low gate counts, often found in GPUs of the day (1999).

The hashing functions that PBKDF2 uses were a lot easier to speed up due to ease of parallelisation, as opposed to the Eksblowfish cipher, which has attributes such as far greater memory required for each hash, and small and frequent pseudo-random memory accesses, making it harder to cache the data into faster memory. Now with hardware utilising large Field-programmable Gate Arrays (FPGAs), bcrypt brute-forcing is becoming more accessible due to easily obtainable cheap hardware such as:

The sensitive data stored within a data-store should be the output of one of the three key derivation functions we have just discussed, fed with the data you want protected and a salt. All good frameworks will have at least PBKDF2 and bcrypt APIs.
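For scrypt, recent versions of Node ship an implementation in the built-in crypto module, so a sketch of feeding it the data to protect along with a salt could look like this. The cost parameters, key length, function names and storage format are assumptions for illustration, and need tuning for your own environment.

```javascript
'use strict';
const crypto = require('crypto');

// Assumed cost parameters for illustration only:
// N is the CPU/memory cost, r the block size and p the parallelisation.
const SCRYPT_OPTIONS = { N: 16384, r: 8, p: 1 };
const KEY_LENGTH = 32; // bytes

// Derive the protected form of the secret with a fresh random salt,
// and return the salt and the derived key together for storage.
function protectWithScrypt(secret) {
  const salt = crypto.randomBytes(16);
  const derived = crypto.scryptSync(secret, salt, KEY_LENGTH, SCRYPT_OPTIONS);
  return salt.toString('hex') + '$' + derived.toString('hex');
}

// Re-derive with the stored salt and compare in constant time.
function verifyWithScrypt(secret, stored) {
  const parts = stored.split('$');
  const salt = Buffer.from(parts[0], 'hex');
  const expected = Buffer.from(parts[1], 'hex');
  const actual = crypto.scryptSync(secret, salt, expected.length, SCRYPT_OPTIONS);
  return crypto.timingSafeEqual(actual, expected);
}
```

The same protect and verify shape applies whichever of the three KDFs you settle on. Only the derivation call and its tuning parameters change.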

bcrypt brute-forcing

With well ordered rainbow tables and hardware with high FPGA counts, brute-forcing bcrypt is now feasible:

Risks that Solution Causes

Reliance on adjacent layers of defence means those layers have to actually be up to scratch. There is a possibility that they will not be.

Possibility of missing secrets being sent over the wire.

Possible reliance on obscurity with many of the strategies I have seen proposed. Just be aware that obscurity may slow an attacker down a little, but it will not stop them.

Store Configuration in Configuration files

When moving secrets from source code to configuration files, there is a possibility that the secrets will not be changed at the same time. If they are not changed, then you have not really helped much, as the secrets are still in source control.

With good configuration tools like node-config, you are provided with plenty of options for splitting up meta-data, creating overrides, storing different parts in different places, etc. There is a risk that you do not use the potential power and flexibility to your best advantage. Learn the ins and outs of whatever system it is you are using and leverage its features to do the best at obscuring your secrets and, if possible, securing them.

node-config

node-config is an excellent configuration package with lots of great features. There is no security provided with node-config, just some potential obscurity. Be aware of that and, as discussed previously, make sure the surrounding layers have beefed up security.

Windows

As is often the case with Microsoft solutions, their marketing often leads people to believe that they have secure solutions to problems when that is not the case. As discussed previously, there are plenty of ways to get around Microsoft’s so-called security features. As with anything else in this space, they may provide some obscurity, but do not depend on them being secure.

Statements like the following have the potential for producing over confidence:

“vSentry protects desktops without requiring patches or updates, defeating and automatically discarding all known and unknown malware, and eliminating the need for costly remediation.”

Bromium

Please keep your systems patched and updated.

“With Bromium micro-virtualization, we now have an answer: A desktop that is utterly secure and a joy to use”

Bromium

There is a risk that people will believe this.

Linux

As with Microsoft’s “virtualisation-based security”, Linux containers may slow system compromise down, but a determined attacker will find other ways to get around container isolation. Maintaining a small set of user accounts is a worthwhile practice, but that alone will not be enough to stop a highly skilled and determined attacker moving forward.

Even when technical security is very good, an experienced attacker will use other mediums to gain what they want, like social engineering, physical compromise, both, or some other attack vectors. Defence in depth is crucial in achieving good security: concentrate on the lowest hanging fruit first and work your way up the tree.

Locking file permissions and ownership down is good, but that alone will not save you.

Least Privilege

Applying least privilege to everything can take quite a bit of work. Yes, it is probably not that hard to do, but it does require a breadth of thought and time. Some of the areas discussed could be missed. Having more than one person working on the task is often effective, as each person can bounce ideas off the other, and the other person is likely to notice areas that you may have missed and vice versa.

Location

Segmentation is useful, and a common technique for helping to build resistance against attacks. It does introduce some complexity though. With complexity comes the added likelihood of introducing a fault.

Data-store Compromise

If you follow the advice in the countermeasures section, you will be doing more than most other organisations in this area. It is not hard, but once implemented it could increase complacency/over confidence. Always be on your guard. Always expect that although you have done a lot to increase your security stance, a determined and experienced attacker is going to push buttons you may have never realised you had. If they want something enough and have the resources and determination to get it, they probably will. This is where you need strategies in place to deal with post compromise. Create a process (ideally partly automated) to deal with theft.

Also consider that once an attacker has made off with your data-store, even if it is currently infeasible to brute-force the secrets, there may be other ways around obtaining the missing pieces of information they need. Think about the paper shredders and the associated competitions. With patience, most puzzles can be cracked. If the compromise is an opportunistic type of attack, they will most likely just give up and seek an easier target. If it is a targeted attack by determined and experienced attackers, they will probably try other attack vectors until they get what they want.

Do not let over confidence be your weakness. An attacker will search out the weak link. Do your best to remove weak links.

Costs and Trade-offs

There is potential for hidden costs here, as adjacent layers will need to be hardened. There could be trade-offs here that force us to focus on the adjacent layers. This is never a bad thing though. It helps us to step back and take a holistic view of our security.

Store Configuration in Configuration files

There should be little cost in moving secrets out of source code and into configuration files.

Windows

You will need to weigh up whether the effort to obfuscate secrets is worth it or not. It can also make the developer’s job more cumbersome. Some of the options provided may be worthwhile doing.

Linux

Containers have many other advantages and you may already be using them for making your deployment processes easier and less likely to have dependency issues. They also help with scaling and load balancing, so they have multiple benefits.

Least Privilege

Least privilege is something you should be at least considering, and probably doing, in every case. It is one of those considerations that is worthwhile applying to most layers.

Location

Segmenting of resources is a common and effective measure to take for at least slowing down attacks and a cost well worth considering if you have not already.

Data-store Compromise

The countermeasures discussed here go without saying, although many organisations do not do them well if at all. It is up to you whether you want to be one of the statistics that has all of their secrets revealed. Following the countermeasures here is something that just needs to be done if you have any data that is sensitive in your data-store(s).