As part of the ongoing work around preparing a Debian web server to host applications accessible from the WWW I performed some research, analysis, made decisions along the way and implemented a first stage logging strategy. I’ve done similar set-ups many times before, but thought it worth sharing my experience for all to learn something from it and/or provide input, recommendations, corrections to the process so we all get to improve.

The main system loggers I looked into

- GNU syslogd which I don’t think is being developed anymore? Correct me if I’m wrong. Most Linux distributions no longer ship with this. Only supports UDP. It’s also a bit lacking in features. From what I gather is single-threaded. I didn’t spend long looking at this as there wasn’t much point. The following two offerings are the main players.

- rsyslog: which ships with Debian and most other Linux distros now I believe. I like to do as little as possible and rsyslog fits this description for me. The documentation seems pretty good. Rainer Gerhards wrote rsyslog and his blog provides some good insights. Supports UDP, TCP. Can send over TLS. There is also the Reliable Event Logging Protocol (RELP) which Rainer created.

rsyslog is great at gathering, transporting, storing log messages and includes some really neat functionality for dividing the logs. It’s not designed to alert on logs. That’s where the likes of Simple Event Correlator (SEC) comes in. Rainer discusses why TCP isn’t as reliable as many think here. - syslog-ng: I didn’t spend to long here, as I didn’t see any features that I needed that were better than the default of rsyslog. Can correlate log messages, both real-time and off-line. Supports reliable and encrypted transport using TCP and TLS. message filtering, sorting, pre-processing, log normalisation.

There are are few comparisons around. Most of the ones I’ve seen are a bit biased and often out of date.

Aims

- Record events and have them securely transferred to another syslog server in real-time, or as close to it as possible, so that potential attackers don’t have time to modify them on the local system before they’re replicated to another location

- Reliability (resilience / ability to recover connectivity)

- Extensibility: ability to add more machines and be able to aggregate events from many sources on many machines

- Receive notifications from the upstream syslog server of specific events. No HIDS is going to remove the need to reinstall your system if you are not notified in time and an attacker plants and activates their root-kit.

- Receive notifications from the upstream syslog server of lack of events. The network is down for example.

Environmental Considerations

A couple of servers in the mix:

FreeNAS File Server

Recent versions can send their syslog events to a syslog server. With some work, it looks like FreeNAS can be setup to act as a syslog server.

pfSense Router

Can send log events, but only by UDP by the look of it.

Following are the two strategies that emerged. You can see by the detail that I went down the path of the first one initially. It was the path of least resistance / quickest to setup. I’m going to be moving away from papertrail toward strategy two. Mainly because I’ve had a few issues where messages have been getting lost that have been very hard to track down (I’ve spent over a week on it). As the sender, you have no insight into what papertrail is doing. The support team don’t provide a lot of insight into their service when you have to trouble-shoot things. They have been as helpful as they can be, but I’ve expressed concern around them being unable to trouble-shoot their own services.

Outcomes

Strategy One

Rsyslog, TCP, local queuing, TLS, papertrail for your syslog server (PT doesn’t support RELP, but say that’s because their clients haven’t seen any issues with reliability in using plain TCP over TLS with local queuing). My guess is they haven’t looked hard enough. I must be the first then. Beware!

As I was setting this up and watching both ends. We had an internet outage of just over an hour. At that stage we had very few events being generated, so it was trivial to verify both ends. I noticed that once the ISP’s router was back on-line and the events from the queue moved to papertrail, that there was in fact one missing.

Why did Rainer Gerhards create RELP if TCP with queues was good enough? That was a question that was playing on me for a while. In the end, it was obvious that TCP without RELP isn’t good enough.

At this stage it looks like the queues may loose messages. Rainer says things like “In rsyslog, every action runs on its own queue and each queue can be set to buffer data if the action is not ready. Of course, you must be able to detect that the action is not ready, which means the remote server is off-line. This can be detected with plain TCP syslog and RELP“, but it can be detected without RELP.

You can aggregate log files with rsyslog or by using papertrails remote_syslog daemon.

Alerting is available, including for inactivity of events.

Papertrails documentation is good and support is reasonable. Due to the huge amounts of traffic they have to deal with, they are unable to trouble-shoot any issues you may have. If you still want to go down the papertrail path, to get started, work through this which sets up your rsyslog to use UDP (specified in the /etc/rsyslog.conf by a single ampersand in front of the target syslog server). I want something more reliable than that, so I use two ampersands, which specifies TCP.

As we’re going to be sending our logs over the internet for now, we need TLS. Check papertrails CA server bundle for integrity:

curl https://papertrailapp.com/tools/papertrail-bundle.pem | md5sum

Should be: c75ce425e553e416bde4e412439e3d09

If all good throw the contents of that URL into a file called papertrail-bundle.pem.

Then scp the papertrail-bundle.pem into the web servers /etc dir. The command for that will depend on whether you’re already on the web server and you want to pull, or whether you’re somewhere else and want to push. Then make sure the ownership is correct on the pem file.

chown root:root papertrail-bundle.pem

install rsyslog-gnutls

apt-get install rsyslog-gnutls

Add the TLS config

$DefaultNetstreamDriverCAFile /etc/papertrail-bundle.pem # trust these CAs $ActionSendStreamDriver gtls # use gtls netstream driver $ActionSendStreamDriverMode 1 # require TLS $ActionSendStreamDriverAuthMode x509/name # authenticate by host-name $ActionSendStreamDriverPermittedPeer *.papertrailapp.com

to your /etc/rsyslog.conf. Create egress rule for your router to let traffic out to dest port 39871.

sudo service rsyslog restart

To generate a log message that uses your system syslogd config /etc/rsyslog.conf, run:

logger "hi"

should log “hi” to /var/log/messages and also to papertrail, but it wasn’t.

# Show a live update of the last 10 lines (by default) of /var/log/messages sudo tail -f [-n <number of lines to tail>] /var/log/messages

OK, so lets run rsyslog in config checking mode:

/usr/sbin/rsyslogd -f /etc/rsyslog.conf -N1

Output all good looks like:

rsyslogd: version <the version number>, config validation run (level 1), master config /etc/rsyslog.conf rsyslogd: End of config validation run. Bye.

Trouble-shooting

- https://www.loggly.com/docs/troubleshooting-rsyslog/

- http://help.papertrailapp.com/

- http://help.papertrailapp.com/kb/configuration/troubleshooting-remote-syslog-reachability/

/usr/sbin/rsyslogd -versionwill provide the installed version and supported features.

Which didn’t help a lot, as I don’t have telnet installed. I can’t ping from the DMZ as ICMP is not allowed out and I’m not going to install tcpdump or strace on a production server. The more you have running, the more surface area you have, the greater the opportunities to exploit.

So how do we tell if rsyslogd is actually running if it doesn’t appear to be doing anything useful?

pidof rsyslogd

or

/etc/init.d/rsyslog status

Showing which files rsyslogd has open can be useful:

lsof -p <rsyslogd pid>

or just combine the results of pidof rsyslogd…

sudo lsof -p $(pidof rsyslogd)

To start with I had a line like:

rsyslogd 3426 root 8u IPv4 9636 0t0 TCP <web server IP>:<sending port>->logs2.papertrailapp.com:39871 (SYN_SENT)

Which obviously showed rsyslogd‘s SYN packets were not getting through. I’ve had some discussion with Troy from PT support around the reliability of plain TCP over TLS without RELP. I think if the server is business critical, then strategy two “maybe” the better option. Troy has assured me that they’ve never had any issues with logs being lost due to lack of reliability with out RELP. Troy also pointed me to their recommended local queue options. After adding the queue tweaks and a rsyslogd restart, it resulted in:

rsyslogd 3615 root 8u IPv4 9766 0t0 TCP <web server IP>:<sending port>->logs2.papertrailapp.com:39871 (ESTABLISHED)

I could now see events in the papertrail web UI in real-time.

Socket Statistics (ss)(the better netstat) should also show the established connection.

By default papertrail accepts TCP over TLS (TLS encryption check-box on, Plain text check-box off) and UDP. So if your TLS isn’t setup properly, your events won’t be accepted by papertrail. I later confirmed this to be true.

Check that our Logs are Commuting over TLS

Now without installing anything on the web server or router, or physically touching the server sending packets to papertrail or the router. Using a switch (ubiquitous) rather than a hub. No wire tap or multi-network interfaced computer. No switch monitoring port available on expensive enterprise grade switches (along with the much needed access). We’re basically down to two approaches I can think of and I really couldn’t be bothered getting up out of my chair.

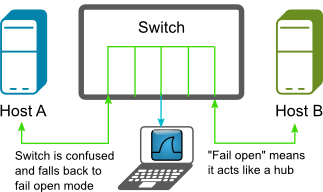

- MAC flooding with the help of macof which is a utility from the dsniff suite. This essentially causes your switch to go into a “failopen mode” where it acts like a hub and broadcasts it’s packets to every port.

–

Or…

– - Man in the Middle (MiTM) with some help from ARP spoofing or poisoning. I decided to choose the second option, as it’s a little more elegant.

–

On our MitM box, I set a static IP: address, netmask, gateway in /etc/network/interfaces and add domain, search and nameservers to the /etc/resolv.conf.

Follow that up with a service network-manager restart

On the web server run:

ifconfig -a

to get MAC: <MitM box MAC> On MitM box run the same command to get MAC: <web server MAC>

On web server run:

ip neighbour

to find MACs associated with IP’s (the local ARP table). Router was: <router MAC>.

myuser@webserver:~$ ip neighbour <MitM box IP> dev eth0 lladdr <MitM box MAC> REACHABLE <router IP> dev eth0 lladdr <router MAC> REACHABLE

Now you need to turn your MitM box into a router temporarily. On the MitM box run

cat /proc/sys/net/ipv4/ip_forward

You’ll see a ‘1’ if forwarding is on. If it’s not, throw a ‘1’ into the file:

echo 1 > /proc/sys/net/ipv4/ip_forward

and check again to make sure. Now on the MitM box run

arpspoof -t <web server IP> <router IP>

This will continue to notify <web server IP> that our (MitM box) MAC address belongs to <router IP>. Essentially… we (MitM box) are <router IP> to the <web server IP> box, but our IP address doesn’t change. Now on the web server you can see that it’s ARP table has been updated and because arpspoof keeps running, it keeps telling <web server IP> that our MitM box is the router.

myuser@webserver:~$ ip neighbour <MitM box IP> dev eth0 lladdr <MitM box MAC> STALE <router IP> dev eth0 lladdr <MitM box MAC> REACHABLE

Now on our MitM box, while our arpspoof continues to run, we start Wireshark listening on our eth0 interface or what ever interface your using, and you can see that all packets that the web server is sending, we are intercepting and forwarding (routing) on to the gateway.

Now Wireshark clearly showed that the data was encrypted. I commented out the five TLS config lines in the /etc/rsyslog.conf file -> saved -> restarted rsyslog -> turned on “Plain text” in papertrail and could now see the messages in clear text. Now when I turned off “Plain text” papertrail would no longer accept syslog events. Excellent!

One of the nice things about arpspoof is that it re-applies the original ARP’s once it’s done.

You can also tell arpspoof to poison the routers ARP table. This way any traffic going to the web server via the router, not originating from the web server will be routed through our MitM box also.

Don’t forget to revert the change to /proc/sys/net/ipv4/ip_forward.

Exporting Wireshark Capture

You can use the File->Save As… option here for a collection of output types, or the way I usually do it is:

- First completely expand all the frames you want visible in your capture file

- File->Export Packet Dissections->as “Plain Text” file…

- Check the “All packets” check-box

- Check the “Packet summary line” check-box

- Check the “Packet details:” check-box and the “As displayed”

- OK

Trouble-shooting messages that papertrail never shows

To run rsyslogd in debug

Check to see which arguments get passed into rsyslogd to run as a daemon in /etc/init.d/rsyslog and /etc/default/rsyslog. You’ll probably see a RSYSLOGD_OPTIONS="". There may be some arguments between the quotes.

sudo service rsyslog stop sudo /usr/sbin/rsyslogd [your options here] -dn >> ~/rsyslog-debug.log

The debug log can be quite useful for trouble-shooting. Also keep your eye on the stderr as you can see if it’s writing anything out (most system start-up scripts throw this away).

Once you’ve finished collecting log:

ctrl+C

sudo service rsyslog start

To see if rsyslog is running

pidof rsyslogd # or /etc/init.d/rsyslog status

Turn on the impstats module

The stats it produces show when you run into errors with an output, and also the state of the queues.

You can also run impstats on the receiving machine if it’s in your control. Papertrail obviously is not.

Put the following into your rsyslog.conf file at the top and restart rsyslog:

# Turn on some internal counters to trouble-shoot missing messages module(load="impstats" interval="600" severity="7" log.syslog="off" # need to turn log stream logging off log.file="/var/log/rsyslog-stats.log") # End turn on some internal counters to trouble-shoot missing messages

Now if you get an error like:

rsyslogd-2039: Could not open output pipe '/dev/xconsole': No such file or directory [try http://www.rsyslog.com/e/2039 ]

You can just change the /dev/xconsole to /dev/console

xconsole is still in the config file for legacy reasons, it should have been cleaned up by the package maintainers.

GnuTLS error in rsyslog-debug.log

By running rsyslogd manually in debug mode, I found an error when the message failed to send:

unexpected GnuTLS error -53 in nsd_gtls.c:1571

Standard Error when running rsyslogd manually produces:

GnuTLS error: Error in the push function

With some help from the GnuTLS mailing list:

“That means that send() returned -1 for some reason.” You can enable more output by adding an environment variable GNUTLS_DEBUG_LEVEL=9 prior to running the application, and that should at least provide you with the errno. This didn’t actually provide any more detail to stderr. However, thanks to Rainer we do now have debug.gnutls parameter in the rsyslog code that if you specify this global variable in the rsyslog.conf and assign it a value between 0-10 you’ll have gnutls debug output going to rsyslog’s debug log.

Strategy Two

Rsyslog, TCP, local queuing, TLS, RELP, SEC, syslog server on local network. Notification for inactivity of events could be performed by cron and SEC?

LogAnalyzer also created by Rainer Gerhards (rsyslog author), but more work to setup than an on-line service you don’t have to setup. In saying that. You would have greater control and security which for me is the big win here.

Normalisation also looks like Rainer has his finger in this pie.

In theory Adding RELP to TCP with local queues is a step-up in terms of reliability. Others have said, the reliability of TCP over TLS with local queues is excellent anyway. I’ve yet to confirm it’s excellence. At the time of writing this post,I’m seriously considering moving toward RELP to help solve my reliability issues.