Just a heads up before we get started on this… Chrome DevTools (v35) now has the ability to show the full call stack of asynchronous JavaScript callbacks. As of writing this, if you develop on Linux you’ll want the dev channel. Currently my Linux Mint 13 is 3 versions behind. So I had to update to the dev channel until I upgraded to the LTS 17 (Qiana).

All code samples can be found at GitHub.

Deep Callback Nesting

AKA callback hell, temple of doom, often the functions that are nested are anonymous and often they are implicit closures. When it comes to asynchronicity in JavaScript, callbacks are our bread and butter. In saying that, often the best way to use them is by abstracting them behind more elegant APIs.

Being aware of when new functions are created and when you need to make sure the memory being held by closure is released (dropped out of scope) can be important for code that’s hot otherwise you’re in danger of introducing subtle memory leaks.

What is it?

Passing functions as arguments to functions which return immediately. The function (callback) that’s passed as an argument will be run at some time in the future when the potentially time expensive operation is done. This callback by convention has it’s first parameter as the error on error, or as null on success of the expensive operation. In JavaScript we should never block on potentially time expensive operations such as I/O, network operations. We only have one thread in JavaScript, so we allow the JavaScript implementations to place our discrete operations on the event queue.

One other point I think that’s worth mentioning is that we should never call asynchronous callbacks synchronously unless of course we’re unit testing them, in which case we should be rarely calling them asynchronously. Always allow the JavaScript engine to put the callback into the event queue rather than calling it immediately, even if you already have the result to pass to the callback. By ensuring the callback executes on a subsequent turn of the event loop you are providing strict separation of the callback being allowed to change data that’s shared between itself (usually via closure) and the currently executing function. There are many ways to ensure the callback is run on a subsequent turn of the event loop. Using asynchronous API’s like setTimeout and setImmediate allow you to schedule your callback to run on a subsequent turn. The Promises/A+ specification (discussed below) for example specifies this.

The Test

var assert = require('assert');

var should = require('should');

var requireFrom = require('requirefrom');

var sUTDirectory = requireFrom('post/nodejsAsynchronicityAndCallbackNesting');

var nestedCoffee = sUTDirectory('nestedCoffee');

describe('nodejsAsynchronicityAndCallbackNesting post integration test suite', function (done) {

// if you don't want to wait for the machine to heat up assign minutes: 2.

var minutes = 32;

this.timeout(60000 * minutes);

it('Test the ugly nested callback coffee machine', function (done) {

var result = function (error, state) {

var stateOutCome;

var expectedErrorOutCome = null;

if(!error) {

stateOutCome = 'The state of the ordered coffee is: ' + state.description;

stateOutCome.should.equal('The state of the ordered coffee is: beautiful shot!');

} else {

assert.fail(error, expectedErrorOutCome, 'brew encountered an error. The following are the error details: ' + error.message);

}

done();

};

nestedCoffee().brew(result);

});

});

The System Under Test

'use strict';

module.exports = function nestedCoffee() {

// We don't do instant coffee ####################################

var boilJug = function () {

// Perform long running action, delegating async tasks passing callback and returning immediately.

};

var addInstantCoffeePowder = function () {

// Perform long running action, delegating async tasks passing callback and returning immediately.

console.log('Crappy instant coffee powder is being added.');

};

var addSugar = function () {

// Perform long running action, delegating async tasks passing callback and returning immediately.

console.log('Sugar is being added.');

};

var addBoilingWater = function () {

// Perform long running action, delegating async tasks passing callback and returning immediately.

console.log('Boiling water is being added.');

};

var stir = function () {

// Perform long running action, delegating async tasks passing callback and returning immediately.

console.log('Coffee is being stirred. Hmm...');

};

// We only do real coffee ########################################

var heatEspressoMachine = function (state, callback) {

var error = undefined;

var wrappedCallback = function () {

console.log('Espresso machine heating cycle is done.');

if(!error) {

callback(error, state);

} else

console.log('wrappedCallback encountered an error. The following are the error details: ' + error);

};

// Flick switch, check water.

console.log('Espresso machine has been turned on and is now heating.');

// Mutate state.

// If there is an error, wrap callback with our own error function

// Even if you call setTimeout with a time of 0 ms, the callback you pass is placed on the event queue to be called on a subsequent turn of the event loop.

// Also be aware that setTimeout has a minimum granularity of 4ms for timers nested more than 5 deep. For several reasons we prefer to use setImmediate if we don't want a 4ms minimum wait.

// setImmediate will schedule your callbacks on the next turn of the event loop, but it goes about it in a smarter way. Read more about it here: https://developer.mozilla.org/en-US/docs/Web/API/Window.setImmediate

// If you are using setImmediate and it's not available in the browser, use the polyfill: https://github.com/YuzuJS/setImmediate

// For this, we need to wait for our huge hunk of copper to heat up, which takes a lot longer than a few milliseconds.

setTimeout(

// Once espresso machine is hot callback will be invoked on the next turn of the event loop...

wrappedCallback, espressoMachineHeatTime.milliseconds

);

};

var grindDoseTampBeans = function (state, callback) {

// Perform long running action.

console.log('We are now grinding, dosing, then tamping our dose.');

// To save on writing large amounts of code, the callback would get passed to something that would run it at some point in the future.

// We would then return immediately with the expectation that callback will be run in the future.

callback(null, state);

};

var mountPortaFilter = function (state, callback) {

// Perform long running action.

console.log('Porta filter is now being mounted.');

// To save on writing large amounts of code, the callback would get passed to something that would run it at some point in the future.

// We would then return immediately with the expectation that callback will be run in the future.

callback(null, state);

};

var positionCup = function (state, callback) {

// Perform long running action.

console.log('Placing cup under portafilter.');

// To save on writing large amounts of code, the callback would get passed to something that would run it at some point in the future.

// We would then return immediately with the expectation that callback will be run in the future.

callback(null, state);

};

var preInfuse = function (state, callback) {

// Perform long running action.

console.log('10 second preinfuse now taking place.');

// To save on writing large amounts of code, the callback would get passed to something that would run it at some point in the future.

// We would then return immediately with the expectation that callback will be run in the future.

callback(null, state);

};

var extract = function (state, callback) {

// Perform long running action.

console.log('Cranking leaver down and extracting pure goodness.');

state.description = 'beautiful shot!';

// To save on writing large amounts of code, the callback would get passed to something that would run it at some point in the future.

// We would then return immediately with the expectation that callback will be run in the future.

// Uncomment the below to test the error.

//callback({message: 'Oh no, something has gone wrong!'})

callback(null, state);

};

var espressoMachineHeatTime = {

// if you don't want to wait for the machine to heat up assign minutes: 0.2.

minutes: 30,

get milliseconds() {

return this.minutes * 60000;

}

};

var state = {

description: ''

// Other properties

};

var brew = function (onCompletion) {

// Some prep work here possibly.

heatEspressoMachine(state, function (err, resultFromHeatEspressoMachine) {

if(!err) {

grindDoseTampBeans(state, function (err, resultFromGrindDoseTampBeans) {

if(!err) {

mountPortaFilter(state, function (err, resultFromMountPortaFilter) {

if(!err) {

positionCup(state, function (err, resultFromPositionCup) {

if(!err) {

preInfuse(state, function (err, resultFromPreInfuse) {

if(!err) {

extract(state, function (err, resultFromExtract) {

if(!err)

onCompletion(null, state);

else

onCompletion(err, null);

});

} else

onCompletion(err, null);

});

} else

onCompletion(err, null);

});

} else

onCompletion(err, null);

});

} else

onCompletion(err, null);

});

} else

onCompletion(err, null);

});

};

return {

// Publicise brew.

brew: brew

};

};

What’s wrong with it?

- It’s hard to read, reason about and maintain

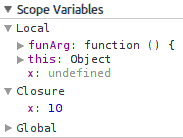

- The debugging experience isn’t very informative

- It creates more garbage than adding your functions to a prototype

- Dangers of leaking memory due to retaining closure references

- Many more…

What’s right with it?

Closures are one of the language features in JavaScript that they got right. There are often issues in how we use them though. Be very careful of what you’re doing with closures. If you’ve got hot code, don’t create a new function every time you want to execute it.

Resources

- Chapter 7 Concurrency of the Effective JavaScript book by David Herman

Alternative Approaches

Ranging from marginally good approaches to better approaches. Keeping in mind that all these techniques add value and some make more sense in some situations than others. They are all approaches for making the callback hell more manageable and often encapsulating it completely, so much so that the underlying workings are no longer just a bunch of callbacks but rather well thought out implementations offering up a consistent well recognised API. Try them all, get used to them all, then pick the one that suites your particular situation. The first two examples from here are blocking though, so I wouldn’t use them as they are, they are just an example of how to make some improvements.

Name your anonymous functions

- They’ll be easier to read and understand

- You’ll get a much better debugging experience, as stack traces will reference named functions rather than “anonymous function”

- If you want to know where the source of an exception was

- Reveals your intent without adding comments

- In itself will allow you to keep your nesting shallow

- A first step to creating more extensible code

We’ve made some improvements in the next two examples, but introduced blocking in the arrays prototypes forEach loop which we really don’t want to do.

Example of Anonymous Functions

var boilJug = function () {

// Perform long running action

};

var addInstantCoffeePowder = function () {

// Perform long running action

console.log('Crappy instant coffee powder is being added.');

};

var addSugar = function () {

// Perform long running action

console.log('Sugar is being added.');

};

var addBoilingWater = function () {

// Perform long running action

console.log('Boiling water is being added.');

};

var stir = function () {

// Perform long running action

console.log('Coffee is being stirred. Hmm...');

};

var heatEspressoMachine = function () {

// Flick switch, check water.

console.log('Espresso machine is being turned on and is now heating.');

};

var grindDoseTampBeans = function () {

// Perform long running action

console.log('We are now grinding, dosing, then tamping our dose.');

};

var mountPortaFilter = function () {

// Perform long running action

console.log('Portafilter is now being mounted.');

};

var positionCup = function () {

// Perform long running action

console.log('Placing cup under portafilter.');

};

var preInfuse = function () {

// Perform long running action

console.log('10 second preinfuse now taking place.');

};

var extract = function () {

// Perform long running action

console.log('Cranking leaver down and extracting pure goodness.');

};

(function () {

// Array.prototype.forEach executes your callback synchronously (that's right, it's blocking) for each element of the array.

return [

'heatEspressoMachine',

'grindDoseTampBeans',

'mountPortaFilter',

'positionCup',

'preInfuse',

'extract',

].forEach(

function (brewStep) {

this[brewStep]();

}

);

}());

Example of Named Functions

Now satisfies all the points above, providing the same output. Hopefully you’ll be able to see a few other issues I’ve addressed with this example. We’re also no longer clobbering the global scope. We can now also make any of the other types of coffee simply with an additional single line function call, so we’re removing duplication.

var BINARYMIST = (function (binMist) {

binMist.coffee = {

action: function (step) {

return {

boilJug: function () {

// Perform long running action

},

addInstantCoffeePowder: function () {

// Perform long running action

console.log('Crappy instant coffee powder is being added.');

},

addSugar: function () {

// Perform long running action

console.log('Sugar is being added.');

},

addBoilingWater: function () {

// Perform long running action

console.log('Boiling water is being added.');

},

stir: function () {

// Perform long running action

console.log('Coffee is being stirred. Hmm...');

},

heatEspressoMachine: function () {

// Flick switch, check water.

console.log('Espresso machine is being turned on and is now heating.');

},

grindDoseTampBeans: function () {

// Perform long running action

console.log('We are now grinding, dosing, then tamping our dose.');

},

mountPortaFilter: function () {

// Perform long running action

console.log('Portafilter is now being mounted.');

},

positionCup: function () {

// Perform long running action

console.log('Placing cup under portafilter.');

},

preInfuse: function () {

// Perform long running action

console.log('10 second preinfuse now taking place.');

},

extract: function () {

// Perform long running action

console.log('Cranking leaver down and extracting pure goodness.');

}

}[step]();

},

coffeeType: function (type) {

return {

'cappuccino': {

brewSteps: function () {

return [

// Lots of actions

];

}

},

'instant': {

brewSteps: function () {

return [

'addInstantCoffeePowder',

'addSugar',

'addBoilingWater',

'stir'

];

}

},

'macchiato': {

brewSteps: function () {

return [

// Lots of actions

];

}

},

'mocha': {

brewSteps: function () {

return [

// Lots of actions

];

}

},

'short black': {

brewSteps: function () {

return [

'heatEspressoMachine',

'grindDoseTampBeans',

'mountPortaFilter',

'positionCup',

'preInfuse',

'extract',

];

}

}

}[type];

},

'brew': function (requestedCoffeeType) {

var that = this;

var brewSteps = this.coffeeType(requestedCoffeeType).brewSteps();

// Array.prototype.forEach executes your callback synchronously (that's right, it's blocking) for each element of the array.

brewSteps.forEach(function runCoffeeMakingStep(brewStep) {

that.action(brewStep);

});

}

};

return binMist;

} (BINARYMIST || {/*if BINARYMIST is falsy, create a new object and pass it*/}));

BINARYMIST.coffee.brew('short black');

Web Workers

I’ll address these in another post.

Create Modules

Everywhere.

CommonJS type Modules in Node.js

In most of the examples I’ve created in this post I’ve exported the system under test (SUT) modules and then required them into the test. Node modules are very easy to create and consume. requireFrom is a great way to require your local modules without explicit directory traversal, thus removing the need to change your require statements when you move your files that are requiring your modules.

NPM Packages

Here we get to consume npm packages in the browser.

Universal Module Definition (UMD)

ES6 Modules

That’s right, we’re getting modules as part of the specification (15.2). Check out this post by Axel Rauschmayer to get you started.

Recursion

I’m not going to go into this here, but recursion can be used as a light weight solution to provide some logic to determine when to run the next asynchronous piece of work. Item 64 “Use Recursion for Asynchronous Loops” of the Effective JavaScript book provides some great examples. Do your self a favour and get a copy of David Herman’s book. Oh, we’re also getting tail-call optimisation in ES6.

|

Still creates more garbage unless your functions are on the prototype, but does provide asynchronicity. Now we can put our functions on the prototype, but then they’ll all be public and if they’re part of a process then we don’t want our coffee process spilling all it’s secretes about how it makes perfect coffee. In saying that, if our code is hot and we’ve profiled it and it’s a stand-out for using to much memory, we could refactor EventEmittedCoffee to have its function declarations added to EventEmittedCoffee.prototype and perhaps hidden another way, but I wouldn’t worry about it until it’s been proven to be using to much memory.

Events are used in the well known Ganf Of Four Observer (behavioural) pattern (which I discussed the C# implementation of here) and at a higher level the Enterprise Integration Publish/Subscribe pattern. The Observer pattern is used in quite a few other patterns also. The ones that spring to mind are Model View Presenter, Model View Controller. The pub/sub pattern is slightly different to the Observer in that it has a topic/event channel that sits between the publisher and the subscriber and it uses contractual messages to encapsulate and transmit it’s events.

Here’s an example of the EventEmitter …

The Test

var assert = require('assert');

var should = require('should');

var requireFrom = require('requirefrom');

var sUTDirectory = requireFrom('post/nodejsAsynchronicityAndCallbackNesting');

var eventEmittedCoffee = sUTDirectory('eventEmittedCoffee');

describe('nodejsAsynchronicityAndCallbackNesting post integration test suite', function () {

// if you don't want to wait for the machine to heat up assign minutes: 2.

var minutes = 32;

this.timeout(60000 * minutes);

it('Test the event emitted coffee machine', function (done) {

function handleSuccess(state) {

var stateOutCome = 'The state of the ordered coffee is: ' + state.description;

stateOutCome.should.equal('The state of the ordered coffee is: beautiful shot!');

done();

}

function handleFailure(error) {

assert.fail(error, 'brew encountered an error. The following are the error details: ' + error.message);

done();

}

// We could even assign multiple event handlers to the same event. We're not here, but we could.

eventEmittedCoffee.on('successfulOrder', handleSuccess).on('failedOrder', handleFailure);

eventEmittedCoffee.brew();

});

});

The System Under Test

'use strict';

var events = require('events'); // Core node module.

var util = require('util'); // Core node module.

var eventEmittedCoffee;

var espressoMachineHeatTime = {

// if you don't want to wait for the machine to heat up assign minutes: 0.2.

minutes: 30,

get milliseconds() {

return this.minutes * 60000;

}

};

var state = {

description: '',

// Other properties

error: ''

};

function EventEmittedCoffee() {

var eventEmittedCoffee = this;

function heatEspressoMachine(state) {

// No need for callbacks. We can emit a failedOrder event at any stage and any subscribers will be notified.

function emitEspressoMachineHeated() {

console.log('Espresso machine heating cycle is done.');

eventEmittedCoffee.emit('espressoMachineHeated', state);

}

// Flick switch, check water.

console.log('Espresso machine has been turned on and is now heating.');

// Mutate state.

setTimeout(

// Once espresso machine is hot event will be emitted on the next turn of the event loop...

emitEspressoMachineHeated, espressoMachineHeatTime.milliseconds

);

}

function grindDoseTampBeans(state) {

// Perform long running action, delegating async tasks passing callback and returning immediately.

console.log('We are now grinding, dosing, then tamping our dose.');

eventEmittedCoffee.emit('groundDosedTampedBeans', state);

}

function mountPortaFilter(state) {

// Perform long running action, delegating async tasks passing callback and returning immediately.

console.log('Porta filter is now being mounted.');

eventEmittedCoffee.emit('portaFilterMounted', state);

}

function positionCup(state) {

// Perform long running action, delegating async tasks passing callback and returning immediately.

console.log('Placing cup under portafilter.');

eventEmittedCoffee.emit('cupPositioned', state);

}

function preInfuse(state) {

// Perform long running action, delegating async tasks passing callback and returning immediately.

console.log('10 second preinfuse now taking place.');

eventEmittedCoffee.emit('preInfused', state);

}

function extract(state) {

// Perform long running action, delegating async tasks passing callback and returning immediately.

console.log('Cranking leaver down and extracting pure goodness.');

state.description = 'beautiful shot!';

eventEmittedCoffee.emit('successfulOrder', state);

// If you want to fail the order, replace the above two lines with the below two lines.

// state.error = 'Oh no! That extraction came out far to fast.'

// this.emit('failedOrder', state);

}

eventEmittedCoffee.on('timeToHeatEspressoMachine', heatEspressoMachine).

on('espressoMachineHeated', grindDoseTampBeans).

on('groundDosedTampedBeans', mountPortaFilter).

on('portaFilterMounted', positionCup).

on('cupPositioned', preInfuse).

on('preInfused', extract);

}

// Make sure util.inherits is before any prototype augmentations, as it seems it clobbers the prototype if it's the other way around.

util.inherits(EventEmittedCoffee, events.EventEmitter);

// Only public method.

EventEmittedCoffee.prototype.brew = function () {

this.emit('timeToHeatEspressoMachine', state);

};

eventEmittedCoffee = new EventEmittedCoffee();

module.exports = eventEmittedCoffee;

With using raw callbacks, we have to pass them (functions) around. With events, we can have many interested parties request (subscribe) to be notified when something that our interested parties are interested in happens (the event). The Observer pattern promotes loose coupling, as the thing (publisher) wanting to inform interested parties of specific events has no knowledge of it’s subscribers, this is essentially what a service is.

Resources

Provides a collection of methods on the async object that:

- take an array and perform certain actions on each element asynchronously

- take a collection of functions to execute in specific orders asynchronously, some based on different criteria. The likes of

async.waterfall allow you to pass results of a previous function to the next. Don’t underestimate these. There are a bunch of very useful routines.

- are asynchronous utilities

Here’s an example…

The Test

var assert = require('assert');

var should = require('should');

var requireFrom = require('requirefrom');

var sUTDirectory = requireFrom('post/nodejsAsynchronicityAndCallbackNesting');

var asyncCoffee = sUTDirectory('asyncCoffee');

describe('nodejsAsynchronicityAndCallbackNesting post integration test suite', function () {

// if you don't want to wait for the machine to heat up assign minutes: 2.

var minutes = 32;

this.timeout(60000 * minutes);

it('Test the async coffee machine', function (done) {

var result = function (error, resultsFromAllAsyncSeriesFunctions) {

var stateOutCome;

var expectedErrorOutCome = null;

if(!error) {

stateOutCome = 'The state of the ordered coffee is: '

+ resultsFromAllAsyncSeriesFunctions[resultsFromAllAsyncSeriesFunctions.length - 1].description;

stateOutCome.should.equal('The state of the ordered coffee is: beautiful shot!');

} else {

assert.fail(

error,

expectedErrorOutCome,

'brew encountered an error. The following are the error details. message: '

+ error.message

+ '. The finished state of the ordered coffee is: '

+ resultsFromAllAsyncSeriesFunctions[resultsFromAllAsyncSeriesFunctions.length - 1].description

);

}

done();

};

asyncCoffee().brew(result)

});

});

The System Under Test

'use strict';

var async = require('async');

var espressoMachineHeatTime = {

// if you don't want to wait for the machine to heat up assign minutes: 0.2.

minutes: 30,

get milliseconds() {

return this.minutes * 60000;

}

};

var state = {

description: '',

// Other properties

error: null

};

module.exports = function asyncCoffee() {

var brew = function (onCompletion) {

async.series([

function heatEspressoMachine(heatEspressoMachineDone) {

// No need for callbacks. We can just pass an error to the async supplied callback at any stage and the onCompletion callback will be invoked with the error and the results immediately.

function espressoMachineHeated() {

console.log('Espresso machine heating cycle is done.');

heatEspressoMachineDone(state.error);

}

// Flick switch, check water.

console.log('Espresso machine has been turned on and is now heating.');

// Mutate state.

setTimeout(

// Once espresso machine is hot, heatEspressoMachineDone will be invoked on the next turn of the event loop...

espressoMachineHeated, espressoMachineHeatTime.milliseconds

);

},

function grindDoseTampBeans(grindDoseTampBeansDone) {

// Perform long running action, delegating async tasks passing callback and returning immediately.

console.log('We are now grinding, dosing, then tamping our dose.');

grindDoseTampBeansDone(state.error);

},

function mountPortaFilter(mountPortaFilterDone) {

// Perform long running action, delegating async tasks passing callback and returning immediately.

console.log('Porta filter is now being mounted.');

mountPortaFilterDone(state.error);

},

function positionCup(positionCupDone) {

// Perform long running action, delegating async tasks passing callback and returning immediately.

console.log('Placing cup under portafilter.');

positionCupDone(state.error);

},

function preInfuse(preInfuseDone) {

// Perform long running action, delegating async tasks passing callback and returning immediately.

console.log('10 second preinfuse now taking place.');

preInfuseDone(state.error);

},

function extract(extractDone) {

// Perform long running action, delegating async tasks passing callback and returning immediately.

console.log('Cranking leaver down and extracting pure goodness.');

// If you want to fail the order, uncomment the below line. May as well change the description too.

// state.error = {message: 'Oh no! That extraction came out far to fast.'};

state.description = 'beautiful shot!';

extractDone(state.error, state);

}

],

onCompletion);

};

return {

// Publicise brew.

brew: brew

};

};

Other Similar Useful libraries

Adding to Prototype

Check out my post on prototypes. If profiling reveals you’re spending to much memory or processing time creating the objects that contain the functions that are going to be used asynchronously you could add the functions to the objects prototype like we did with the public brew method of the EventEmitter example above.

Promises

The concepts of promises and futures which are quite similar, have been around a long time. their roots go back to 1976 and 1977 respectively. Often the terms are used interchangeably, but they are not the same thing. You can think of the language agnostic promise as a proxy for a value provided by an asynchronous actions eventual success or failure. a promise is something tangible, something you can pass around and interact with… all before or after it’s resolved or failed. The abstract concept of the future (discussed below) has a value that can be mutated once from pending to either fulfilled or rejected on fulfilment or rejection of the promise.

Promises provide a pattern that abstracts asynchronous operations in code thus making them easier to reason about. Promises which abstract callbacks can be passed around and the methods on them chained (AKA Promise pipelining). Removing temporary variables makes it more concise and clearer to readers that the extra assignments are an unnecessary step.

JavaScript Promises

A promise (Promises/A+ thenable) is an object or sometimes more specifically a function with a then (JavaScript specific) method.

A promise must only change it’s state once and can only change from either pending to fulfilled or pending to rejected.

Semantically a future is a read-only property.

A future can only have its value set (sometimes called resolved, fulfilled or bound) once by one or more (via promise pipelining) associated promises.

Futures are not discussed explicitly in the Promises/A+, although are discussed implicitly in the promise resolution procedure which takes a promise as the first argument and a value as the second argument.

The idea is that the promise (first argument) adopts the state of the second argument if the second argument is a thenable (a promise object with a then method). This procedure facilitates the concept of the “future”

We’re getting promises in ES6. That means JavaScript implementers are starting to include them as part of the language. Until we get there, we can use the likes of these libraries.

One of the first JavaScript promise drafts was the Promises/A (1) proposal. The next stage in defining a standardised form of promises for JavaScript was the Promises/A+ (2) specification which also has some good resources for those looking to use and implement promises based on the new spec. Just keep in mind though, that this has nothing to do with the EcmaScript specification, although it is/was a precursor.

Then we have Dominic Denicola’s promises-unwrapping repository (3) for those that want to stay ahead of the solidified ES6 draft spec (4). Dominic’s repo is slightly less specky and may be a little more convenient to read, but if you want gospel, just go for the ES6 draft spec sections 25.4 Promise Objects and 7.5 Operations on Promise Objects which is looking fairly solid now.

The 1, 2, 3 and 4 are the evolutionary path of promises in JavaScript.

Node Support

Although it was decided to drop promises from Node core, we’re getting them in ES6 anyway. V8 already supports the spec and we also have plenty of libraries to choose from.

Node on the other hand is lagging. Node stable 0.10.29 still looks to be using version 3.14.5.9 of V8 which still looks to be about 17 months from the beginnings of the first sign of native ES6 promises according to how I’m reading the V8 change log and the Node release notes.

So to get started using promises in your projects whether your programming server or client side, you can:

- Use one of the excellent Promises/A+ conformant libraries which will give you the flexibility of lots of features if that’s what you need, or

- Use the native browser promise API of which all ES6 methods on Promise work in Chrome (V8 -> Soon in Node), Firefox and Opera. Then polyfill using the likes of yepnope, or just check the existence of the methods you require and load them on an as needed basis. The cujojs or jakearchibald shims would be good starting points.

For my examples I’ve decided to use when.js for several reasons.

- Currently in Node we have no native support. As stated above, this will be changing soon, so we’d be polyfilling everything.

- It’s performance is the least worst of the Promises/A+ compliant libraries at this stage. Although don’t get to hung up on perf stats. In most cases they won’t matter in context of your module. If you’re concerned, profile your running code.

- It wraps non Promises/A+ compliant promise look-a-likes like jQuery’s Deferred which will forever remain broken.

- Is compliant with spec version 1.1

The following example continues with the coffee making procedure concept. Now we’ve taken this from raw callbacks to using the EventEmitter to using the Async library and finally to what I think is the best option for most of our asynchronous work, not only in Node but JavaScript anywhere. Promises. Now this is just one way to implement the example. There are many and probably many of which are even more elegant. Go forth explore and experiment.

The Test

var should = require('should');

var requireFrom = require('requirefrom');

var sUTDirectory = requireFrom('post/nodejsAsynchronicityAndCallbackNesting');

var promisedCoffee = sUTDirectory('promisedCoffee');

describe('nodejsAsynchronicityAndCallbackNesting post integration test suite', function () {

// if you don't want to wait for the machine to heat up assign minutes: 2.

var minutes = 32;

this.timeout(60000 * minutes);

it('Test the coffee machine of promises', function (done) {

var numberOfSteps = 7;

// We could use a then just as we've used the promises done method, but done is semantically the better choice. It makes a bigger noise about handling errors. Read the docs for more info.

promisedCoffee().brew().done(

function handleValue(valueOrErrorFromPromiseChain) {

console.log(valueOrErrorFromPromiseChain);

valueOrErrorFromPromiseChain.errors.should.have.length(0);

valueOrErrorFromPromiseChain.stepResults.should.have.length(numberOfSteps);

done();

}

);

});

});

The System Under Test

'use strict';

var when = require('when');

var espressoMachineHeatTime = {

// if you don't want to wait for the machine to heat up assign minutes: 0.2.

minutes: 30,

get milliseconds() {

return this.minutes * 60000;

}

};

var state = {

description: '',

// Other properties

errors: [],

stepResults: []

};

function CustomError(message) {

this.message = message;

// return false

return false;

}

function heatEspressoMachine(resolve, reject) {

state.stepResults.push('Espresso machine has been turned on and is now heating.');

function espressoMachineHeated() {

var result;

// result will be wrapped in a new promise and provided as the parameter in the promises then methods first argument.

result = 'Espresso machine heating cycle is done.';

// result could also be assigned another promise

resolve(result);

// Or call the reject

//reject(new Error('Something screwed up here')); // You'll know where it originated from. You'll get full stack trace.

}

// Flick switch, check water.

console.log('Espresso machine has been turned on and is now heating.');

// Mutate state.

setTimeout(

// Once espresso machine is hot, heatEspressoMachineDone will be invoked on the next turn of the event loop...

espressoMachineHeated, espressoMachineHeatTime.milliseconds

);

}

// The promise takes care of all the asynchronous stuff without a lot of thought required.

var promisedCoffee = when.promise(heatEspressoMachine).then(

function fulfillGrindDoseTampBeans(result) {

state.stepResults.push(result);

// Perform long running action, delegating async tasks passing callback and returning immediately.

return 'We are now grinding, dosing, then tamping our dose.';

// Or if something goes wrong:

// throw new Error('Something screwed up here'); // You'll know where it originated from. You'll get full stack trace.

},

function rejectGrindDoseTampBeans(error) {

// Deal with the error. Possibly augment some additional insight and re-throw.

if(state.errors[state.errors.length -1] !== error.message)

state.errors.push(error.message);

throw new CustomError(error.message);

}

).then(

function fulfillMountPortaFilter(result) {

state.stepResults.push(result);

// Perform long running action, delegating async tasks passing callback and returning immediately.

return 'Porta filter is now being mounted.';

},

function rejectMountPortaFilter(error) {

// Deal with the error. Possibly augment some additional insight and re-throw.

if(state.errors[state.errors.length -1] !== error.message)

state.errors.push(error.message);

throw new Error(error.message);

}

).then(

function fulfillPositionCup(result) {

state.stepResults.push(result);

// Perform long running action, delegating async tasks passing callback and returning immediately.

return 'Placing cup under portafilter.';

},

function rejectPositionCup(error) {

// Deal with the error. Possibly augment some additional insight and re-throw.

if(state.errors[state.errors.length -1] !== error.message)

state.errors.push(error.message);

throw new CustomError(error.message);

}

).then(

function fulfillPreInfuse(result) {

state.stepResults.push(result);

// Perform long running action, delegating async tasks passing callback and returning immediately.

return '10 second preinfuse now taking place.';

},

function rejectPreInfuse(error) {

// Deal with the error. Possibly augment some additional insight and re-throw.

if(state.errors[state.errors.length -1] !== error.message)

state.errors.push(error.message);

throw new CustomError(error.message);

}

).then(

function fulfillExtract(result) {

state.stepResults.push(result);

state.description = 'beautiful shot!';

state.stepResults.push('Cranking leaver down and extracting pure goodness.');

// Perform long running action, delegating async tasks passing callback and returning immediately.

return state;

},

function rejectExtract(error) {

// Deal with the error. Possibly augment some additional insight and re-throw.

if(state.errors[state.errors.length -1] !== error.message)

state.errors.push(error.message);

throw new CustomError(error.message);

}

).catch(CustomError, function (e) {

// Only deal with the error type that we know about.

// All other errors will propagate to the next catch. whenjs also has a finally if you need it.

// Todo: KimC. Do the dealing with e.

e.newCustomErrorInformation = 'Ok, so we have now dealt with the error in our custom error handler.';

return e;

}

).catch(function (e) {

// Handle other errors

e.newUnknownErrorInformation = 'Hmm, we have an unknown error.';

return e;

}

);

function brew() {

return promisedCoffee;

}

// when's promise.catch is only supposed to catch errors derived from the native Error (etc) functions.

// Although in my tests, my catch(CustomError func) wouldn't catch it. I'm assuming there's a bug as it kept giving me a TypeError instead.

// Looks like it came from within the library. So this was a little disappointing.

CustomError.prototype = Error;

module.exports = function promisedCoffee() {

return {

// Publicise brew.

brew: brew

};

};

Resources

Testing Asynchronous Code

All of the tests I demonstrated above have been integration tests. Usually I’d unit test the functions individually not worrying about the intrinsically asynchronous code, as most of it isn’t mine anyway, it’s C/O the EventEmitter, Async and other libraries and there is often no point in testing what the library maintainer already tests.

When you’re driving your development with tests, there should be little code testing the asynchronicity. Most of your code should be able to be tested synchronously. This is a big part of the reason why we drive our development with tests, to make sure your code is easy to test. Testing asynchronous code is a pain, so don’t do it much. Test your asynchronous code yes, but most of your business logic should be just functions that you join together asynchronously. When you’re unit testing, you should be testing units, not asynchronous code. When you’re concerned about testing your asynchronicity, that’s called integration testing. Which you should have a lot less of. I discuss the ratios here.

As of 1.18.0 Mocha now has baked in support for promises. For fluent style of testing promises we have Chai as Promised.

Resources

There are plenty of other resources around working with promises in JavaScript. For myself I found that I needed to actually work with them to solidify my understanding of them. With Chrome DevTool async option, we’ll soon have support for promise chaining.

Other Excellent Resources

And again all of the code samples can be found at GitHub.