Not to be confused with the GoF Prototype pattern, which defines a lot more than the simple JavaScript prototype, although the abstract concept of the prototype is the same.

My intention with this post is to arm our developers with enough information about JavaScript prototypes to know when they’re the right tool for the job, as opposed to other constructs, when writing polymorphic JavaScript that’s performant and easy to maintain. Performant code and easy-to-maintain code are often in conflict with each other, i.e. if you want code that’s fast, it’s often hard to read, and if you want code that’s really easy to read, it “may” not be as fast as it could/should be. So we make trade-offs.

Make your code as readable as possible in as many places as possible. The more eyes that are going to be on it, generally the more readable it needs to be. Where performance really matters, we “may” have to carefully sacrifice some precious readability to achieve the essential performance required. This really needs measuring though, because we often think we’re writing fast code when the speed either doesn’t matter or the code just isn’t fast. So we should always favour readability, then profile our running application in an environment as close to production as possible. This removes the guesswork, which we usually get wrong anyway. I’m currently working on a Node.js performance blog post in which I’ll attempt to address many aspects of performance. What I’m finding a lot of the time is that techniques I’ve been told are essential for fast code are all too often incorrect. We must measure.

Some background

Before we do the deep dive, let’s step back for a bit. Why do prototypes matter in JavaScript? What do prototypes do for us? Where do prototypes fit into the design philosophy of JavaScript?

What do JavaScript Prototypes do for us?

Removal of Code Duplication (DRY)

Prototypes are excellent for reducing unnecessary duplication of members, each copy of which would otherwise need garbage collecting.
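To make the duplication point concrete, here’s a minimal sketch (Point and PointWithOwnMethod are hypothetical names) comparing a method created per instance inside a constructor with one defined once on the constructor’s prototype. Every instance of the first version carries its own function object; every instance of the second shares one.

```javascript
// Each instance gets its own copy of toString: one extra function object per instance.
function PointWithOwnMethod(x, y) {
  this.x = x;
  this.y = y;
  this.toString = function () {
    return '(' + this.x + ', ' + this.y + ')';
  };
}

// The method lives once on the prototype and is shared by every instance.
function Point(x, y) {
  this.x = x;
  this.y = y;
}

Point.prototype.toString = function () {
  return '(' + this.x + ', ' + this.y + ')';
};

var a1 = new PointWithOwnMethod(1, 2);
var a2 = new PointWithOwnMethod(3, 4);
var b1 = new Point(1, 2);
var b2 = new Point(3, 4);

console.log(a1.toString === a2.toString); // false: two separate function objects
console.log(b1.toString === b2.toString); // true: one shared function
console.log(b1.hasOwnProperty('toString')); // false: found on Point.prototype
console.log(String(b2)); // '(3, 4)'
```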

Performance

Prototypes also allow us to maximise economy of memory, thus reducing Garbage Collection (GC) activity and increasing performance. There are other ways to get this performance though; prototypes, which obtain re-use by sharing the parent object, are not always the best way to get the performance benefits we crave. You can see here, under the “Cached Functions in the Module Pattern” section, that closure (although it isn’t mentioned by name), which is what modules leverage, also gives us the benefit of re-use, as the free variable in the outer scope is baked into the closure. Just check the jsperf for proof.
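The “cached functions” idea can be sketched roughly like this (counterModule and createCounter are hypothetical names, not taken from the referenced article). The functions are created once, in the module’s outer scope, and every object the factory returns references those same function objects. Note that each object still carries one property per cached method, whereas with a prototype the methods aren’t stored on the object at all.

```javascript
var counterModule = (function () {
  // Created once when the module is evaluated; shared by every counter below.
  function increment() {
    this.count += 1;
    return this.count;
  }

  function reset() {
    this.count = 0;
  }

  function createCounter() {
    // Each counter holds its own state but references the cached functions.
    return {
      count: 0,
      increment: increment,
      reset: reset
    };
  }

  return { createCounter: createCounter };
}());

var c1 = counterModule.createCounter();
var c2 = counterModule.createCounter();

c1.increment();
c1.increment();
console.log(c1.count); // 2
console.log(c2.count); // 0
console.log(c1.increment === c2.increment); // true: same function object
```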

The Design Philosophy of JavaScript and Prototypes

Prototypal inheritance was implemented in JavaScript as a key technique to support the object-oriented principle of polymorphism. It provides the flexibility to choose what the more specific object is going to inherit, rather than, as in the classical paradigm, being forced to inherit all the base class’s baggage whether you want it or not.

Four obvious ways to achieve polymorphism:

- Composition (creating an object that composes a contract to another object; a has-a relationship). Learn the pros and cons. Use when it makes sense.

- Prototypal inheritance (is-a relationship). Learn the pros and cons. Use when it makes sense.

- Monkey Patching, courtesy of call, apply and bind.

- Classical inheritance (is-a relationship). Why would you? Please don’t try this in production 😉

Of course there are other ways, and some languages have unique techniques to achieve polymorphism, like templates in C++, generics in C#, first-class polymorphism in Haskell, multimethods in Clojure, etc.
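A minimal sketch of the first three approaches in the list above, side by side (all names are hypothetical): composition forwards to a contained object, prototypal inheritance delegates through the prototype chain, and call borrows a method and runs it against a different this.

```javascript
var speaker = {
  speak: function () {
    return this.name + ' makes a sound.';
  }
};

// Composition (has-a): the dog owns a voice object and forwards to its contract.
function createComposedDog(name) {
  var voice = { describe: function () { return name + ' barks.'; } };
  return {
    speak: function () { return voice.describe(); }
  };
}

// Prototypal inheritance (is-a): the cat delegates speak to speaker via its prototype.
var cat = Object.create(speaker);
cat.name = 'Tom';

// Borrowing with call: run speaker.speak against an unrelated object.
var robot = { name: 'R2' };

console.log(createComposedDog('Rex').speak()); // 'Rex barks.'
console.log(cat.speak()); // 'Tom makes a sound.'
console.log(speaker.speak.call(robot)); // 'R2 makes a sound.'
```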

Diving into the Implementation Details

Before we dive into Prototypes…

What does Composition look like?

There are many great examples of how composing our objects from other objects’ interfaces, whether those objects are owned by the composing object (composition) or aggregated from independent objects (aggregation), provides us with the building blocks to create complex objects that look and behave the way we want them to. This generally gives us plenty of flexibility to swap implementations at will, thus overcoming the tight coupling of classical inheritance.

Many of the Gang of Four (GoF) design patterns we know and love leverage composition and/or aggregation to help create polymorphic objects. There is a difference between aggregation and composition, but both concepts are often used loosely to just mean creating objects that contain other objects. Composition implies ownership, aggregation doesn’t have to. With composition, when the owning object is destroyed, so are the objects that are contained within the owner. This is not necessarily the case for aggregation.
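JavaScript has no explicit object destruction, so the difference shows up in who creates a part and who else holds a reference to it. A minimal sketch with hypothetical Car, Engine and passenger names: the car creates and solely owns its engine (composition), whereas the passengers are created elsewhere and merely referenced (aggregation), so they stay reachable after the car is discarded.

```javascript
function Engine() {
  this.running = false;
}

function Car(passengers) {
  // Composition: the car creates its engine and nothing else references it,
  // so once the car is unreachable, so is the engine.
  this.engine = new Engine();
  // Aggregation: the passengers are created elsewhere and only referenced here.
  this.passengers = passengers;
}

var alice = { name: 'Alice' };
var bob = { name: 'Bob' };
var car = new Car([alice, bob]);

console.log(car.engine instanceof Engine); // true

car = null; // The car and its engine are now eligible for garbage collection.

console.log(alice.name); // 'Alice': the aggregated passenger lives on
```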

An example: each coffee shop is composed of its own unique culture. Each coffee shop fosters a different type of culture, and that unique culture is an aggregation of its people and their attributes. The people who make up a specific coffee shop’s culture can also be part of other cultures that are completely separate from the coffee shop’s culture; they could even leave the current culture without destroying it. But the culture of one coffee shop cannot be the same as the culture of another coffee shop. Every coffee shop’s culture is unique, even if only slightly.


Below, we have a coffeeShop that composes a culture. We use the Strategy pattern within the culture to aggregate the customers. The Visit constructor provides an interface that encapsulates each Concrete Strategy: the description passed to it as an argument is closed over by its describe method.

// Context component of Strategy pattern.

var Programmer = function () {

this.casualVisit = {};

this.businessVisit = {};

// Add additional visit types.

};

// Context component of Strategy pattern.

var ShowPony = function () {

this.casualVisit = {};

this.businessVisit = {};

// Add additional visit types.

};

// Add more persons to make a unique culture.

var customer = {

setCasualVisitStrategy: function (casualVisit) {

this.casualVisit = casualVisit;

},

setBusinessVisitStrategy: function (businessVisit) {

this.businessVisit = businessVisit;

},

doCasualVisit: function () {

console.log(this.casualVisit.describe());

},

doBusinessVisit: function () {

console.log(this.businessVisit.describe());

}

};

// Strategy component of Strategy pattern.

var Visit = function (description) {

// description is closed over, so it's private. Check my last post on closures for more detail

this.describe = function () {

return description;

};

};

var coffeeShop;

Programmer.prototype = customer;

ShowPony.prototype = customer;

coffeeShop = (function () {

var culture = {};

var flavourOfCulture = '';

// Composes culture. The specific type of culture exists to this coffee shop alone.

var whatWeWantExposed = {

culture: {

looksLike: function () {

console.log(flavourOfCulture);

}

}

};

// Other properties ...

(function createCulture() {

var programmer = new Programmer();

var showPony = new ShowPony();

var i = 0;

var propertyName;

programmer.setCasualVisitStrategy(

// Concrete Strategy component of Strategy pattern.

new Visit('Programmer walks to coffee shop wearing jeans and T-shirt. Brings dog, Drinks macchiato.')

);

programmer.setBusinessVisitStrategy(

// Concrete Strategy component of Strategy pattern.

new Visit('Programmer brings software development team. Performs Sprint Planning. Drinks long macchiato.')

);

showPony.setCasualVisitStrategy(

// Concrete Strategy component of Strategy pattern.

new Visit('Show pony cycles to coffee shop in lycra pretending he\'s just done a hill ride. Struts past the ladies chatting them up. Orders Chai Latte.')

);

showPony.setBusinessVisitStrategy(

// Concrete Strategy component of Strategy pattern.

new Visit('Show pony meets business friends in suits. Pretends to work on his macbook pro. Drinks latte.')

);

culture.members = [programmer, showPony, /*lots more*/];

for (i = 0; i < culture.members.length; i++) {

for (propertyName in culture.members[i]) {

if (culture.members[i].hasOwnProperty(propertyName)) {

flavourOfCulture += culture.members[i][propertyName].describe() + '\n';

}

}

}

}());

return whatWeWantExposed;

}());

coffeeShop.culture.looksLike();

// Programmer walks to coffee shop wearing jeans and T-shirt. Brings dog, Drinks macchiato.

// Programmer brings software development team. Performs Sprint Planning. Drinks long macchiato.

// Show pony cycles to coffee shop in lycra pretending he's just done a hill ride. Struts past the ladies chatting them up. Orders Chai Latte.

// Show pony meets business friends in suits. Pretends to work on his macbook pro. Drinks latte.

Now for Prototype

ECMAScript 5

In ES5 we’re a bit spoilt as we have a selection of methods on Object that help with prototypal inheritance.

Object.create takes an object to use as the prototype (or null) and an optional properties object of ECMAScript 5 property descriptors, the same shape as the second parameter of Object.defineProperties. It returns a new object with the first argument as its prototype and the properties described in the property descriptors (if present) added to it.
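Here’s a short sketch of Object.create with both arguments (animal and dog are hypothetical names). One thing worth knowing: properties added through the descriptor object default to non-writable, non-enumerable and non-configurable unless you set those flags.

```javascript
var animal = {
  describe: function () {
    return this.name + ' has ' + this.legs + ' legs.';
  }
};

var dog = Object.create(animal, {
  name: { value: 'Rex', enumerable: true, writable: true },
  // No flags given, so legs is non-writable, non-enumerable and non-configurable.
  legs: { value: 4 }
});

console.log(Object.getPrototypeOf(dog) === animal); // true
console.log(dog.describe()); // 'Rex has 4 legs.'
console.log(Object.keys(dog)); // [ 'name' ]
console.log(Object.getOwnPropertyDescriptor(dog, 'legs').writable); // false
```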


// The object we use as the prototype for hobbit.

var person = {

personType: 'Unknown',

backingOccupation: 'Unknown occupation',

age: 'Unknown'

};

var hobbit = Object.create(person);

Object.defineProperties(person, {

'typeOfPerson': {

enumerable: true,

value: function () {

if(arguments.length === 0)

return this.personType;

else if(arguments.length === 1 && typeof arguments[0] === 'string')

this.personType = arguments[0];

else

throw new TypeError('Number of arguments not supported. Pass 0 arguments to get. Pass 1 string argument to set.');

}

},

'greeting': {

enumerable: true,

value: function () {

console.log('Hi, I\'m a ' + this.typeOfPerson() + ' type of person.');

}

},

'occupation': {

enumerable: true,

get: function () {return this.backingOccupation;},

// Would need to add some parameter checking on the setter.

set: function (value) {this.backingOccupation = value;}

}

});

// Add another property to hobbit.

hobbit.fatAndHairyFeet = 'Yes indeed!';

console.log(hobbit.fatAndHairyFeet); // 'Yes indeed!'

// prototype is unaffected

console.log(person.fatAndHairyFeet); // undefined

console.log(hobbit.typeOfPerson()); // 'Unknown'

hobbit.typeOfPerson('short and hairy');

console.log(hobbit.typeOfPerson()); // 'short and hairy'

console.log(person.typeOfPerson()); // 'Unknown'

hobbit.greeting(); // 'Hi, I'm a short and hairy type of person.'

person.greeting(); // 'Hi, I'm a Unknown type of person.'

console.log(hobbit.age); // 'Unknown'

hobbit.age = 'young';

console.log(hobbit.age); // 'young'

console.log(person.age); // 'Unknown'

console.log(hobbit.occupation); // 'Unknown occupation'

hobbit.occupation = 'mushroom hunter';

console.log(hobbit.occupation); // 'mushroom hunter'

console.log(person.occupation); // 'Unknown occupation'

Object.getPrototypeOf

console.log(Object.getPrototypeOf(hobbit));

// Returns the following:

// { personType: 'Unknown',

// backingOccupation: 'Unknown occupation',

// age: 'Unknown',

// typeOfPerson: [Function],

// greeting: [Function],

// occupation: [Getter/Setter] }

ECMAScript 3

One of the benefits of programming in ES3 is that we have to do more work ourselves, so we learn how some of the lower-level language constructs actually work rather than just playing with syntactic sugar. Syntactic sugar is generally great for productivity, but I still think there’s a danger of running into problems when you don’t really understand what’s happening under the covers.

So let’s check out what really goes on with…

Prototypal Inheritance

What is a Prototype?

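Almost all objects have a prototype (the exceptions are Object.prototype, which sits at the top of the chain, and objects created with Object.create(null)), but not all objects expose their prototype directly via a property called prototype. A prototype is always an object.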

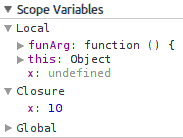

So, if objects have prototypes and prototypes are objects, we have an inheritance chain, right? That’s right, and the chain ends when it reaches a prototype of null. See the debug image.
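You can see the chain for yourself by following Object.getPrototypeOf until it returns null. A minimal sketch (prototypeChain is a hypothetical helper):

```javascript
// Collects every object on obj's prototype chain, nearest first.
function prototypeChain(obj) {
  var chain = [];
  var proto = Object.getPrototypeOf(obj);
  while (proto !== null) {
    chain.push(proto);
    proto = Object.getPrototypeOf(proto);
  }
  return chain;
}

var MyConstructor = function () {};
var myObj = new MyConstructor();
var chain = prototypeChain(myObj);

console.log(chain.length); // 2
console.log(chain[0] === MyConstructor.prototype); // true
console.log(chain[1] === Object.prototype); // true
console.log(Object.getPrototypeOf(Object.prototype)); // null: the end of the chain
```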

Any properties you add to an object’s prototype are shared, through inheritance, by all objects that share that prototype.

So, if objects have a prototype, where is it stored? All objects in JavaScript have an internal property called [[Prototype]]. You won’t see this internal property, but it’s where an object’s prototype is stored. How you reach it depends on whether the object is a plain object (an object literal or an object returned from a constructor) or a function; I discuss how this works below. When you look up a property on an object, the engine first looks on the object itself, then on its prototype, then on the prototype’s prototype, and so on up the prototype chain. It’s a good idea to keep your inheritance hierarchies as shallow as possible for performance reasons.
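The lookup the engine performs can be modelled in a few lines. This is a simplified sketch (lookup is a hypothetical helper, and it doesn’t handle getters and setters the way the engine does), but it shows why a property on the object itself shadows one of the same name further up the chain.

```javascript
// A simplified model of property lookup: own properties first, then each prototype.
function lookup(obj, key) {
  var current = obj;
  while (current !== null) {
    if (Object.prototype.hasOwnProperty.call(current, key)) {
      return current[key];
    }
    current = Object.getPrototypeOf(current);
  }
  return undefined; // Reached the end of the chain without finding key.
}

var base = { greeting: 'hello', colour: 'red' };
var derived = Object.create(base);
derived.colour = 'blue'; // Shadows base.colour.

console.log(lookup(derived, 'greeting')); // 'hello' (found on base)
console.log(lookup(derived, 'colour')); // 'blue' (own property wins)
console.log(lookup(derived, 'missing')); // undefined
console.log(base.colour); // 'red': the prototype is untouched
```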

Prototypes in Functions

Every function object is created with a prototype property, whether it’s used as a constructor or not (this holds for ES3 and ES5; ES6 arrow functions and methods are created without one). The value of the prototype property is an object whose constructor property points back to the function itself. The example below should help clear it up. Section 13.2 of both the ES3 and ES5 specs says pretty much the same thing.

var MyConstructor = function () {};

console.log(MyConstructor.prototype.constructor === MyConstructor); // true

To help with visualising, see the example below. myObj and myObjLiteral are used in the two code examples that follow it.

var MyConstructor = function () {};

var myObj = new MyConstructor();

var myObjLiteral = {};

Up above in the composition example, where we assign Programmer.prototype = customer and ShowPony.prototype = customer, you can see how we access the prototype of a constructor. We can also access the prototype of the object returned from a constructor like this:

var MyConstructor = function () {};

var myObj = new MyConstructor();

console.log(myObj.constructor.prototype === MyConstructor.prototype); // true
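One caveat: constructor isn’t stored on the instance; it’s inherited from the default prototype object. If you replace a function’s prototype wholesale, as the composition example does with Programmer.prototype = customer, instances no longer report the right constructor, so in ES5 Object.getPrototypeOf is the more reliable route. A minimal sketch with a cut-down customer object:

```javascript
var customer = {
  doCasualVisit: function () {}
};

var Programmer = function () {};
Programmer.prototype = customer; // Replaces the default prototype and its constructor property.

var programmer = new Programmer();

console.log(programmer.constructor === Programmer); // false
console.log(programmer.constructor === Object); // true: inherited from Object.prototype
console.log(programmer.constructor.prototype === Programmer.prototype); // false
console.log(Object.getPrototypeOf(programmer) === Programmer.prototype); // true

// If you need constructor to be accurate, restore it on the replacement prototype.
Programmer.prototype = Object.create(customer, {
  constructor: { value: Programmer, writable: true, configurable: true }
});
console.log(new Programmer().constructor === Programmer); // true
```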

We can do something similar with an object literal, as shown below.

Prototypes in Objects that are Not Functions

Objects that aren’t functions are not created with a prototype property (all objects do have the hidden internal [[Prototype]] property, though). Sometimes you’ll see Object.prototype talked about, and even MDN makes the matter a little confusing IMHO. In this case, Object is the Object constructor function, and as discussed above, functions have the prototype property.

When we create object literals, the object we get is the same as if we ran the expression new Object(); (see ES3 and ES5 11.1.5)

So although we can access the prototype property of functions (which may or may not be constructors), there’s no such exposed prototype property directly on objects returned by constructors or on object literals.

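There is, however, a handy constructor property available on all objects returned by constructors and on object literals (you can think of their construction procedures as producing the same result). It isn’t an own property of the object; it’s inherited from the prototype, whose constructor property points back to the constructor function. This looks similar to the above debug image: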

var myObjLiteral = {};

// ES3 way on the left of ===, ES5 way on the right.

console.log(myObjLiteral.constructor.prototype === Object.getPrototypeOf(myObjLiteral)); // true

I’ve purposely avoided discussing the likes of __proto__, as it isn’t defined in ES3 or ES5 (ES6 only specifies it as a legacy feature for web browsers), and there’s no good reason to use something non-standard when Object.getPrototypeOf exists.

Polyfilling to ES5

First, let’s clearly define a couple of terms used in web development before we start talking about them:

- A shim is a library that brings a new API to an environment that doesn’t support it, implementing that API using only what the older environment supports.

- A polyfill is some code in the form of a function, module, plugin, etc that provides the functionality of a later environment (ES5 for example) if it doesn’t exist for an older environment (ES3 for example). The polyfill often acts as a fallback. The programmer writes code targeting the newer environment as though the older environment doesn’t exist, but when the code is pulled into the older environment the polyfill kicks into action as the new language feature isn’t yet implemented natively.

If you’re supporting older browsers that don’t fully support ES5, you can still use the ES5 additions so long as you provide ES5 polyfills. es5-shim is a good choice for this. Check out the html5please ECMAScript 5 section for a little more detail. Also check out Kangax’s ECMAScript 5 compatibility table to see which browsers currently support which ES5 language features. A good approach, and one I like to take, is to use a custom build of a library such as Lo-Dash to provide a layer of abstraction, so I don’t need to care whether the code will run in an ES5 or ES3 environment. Then, for anything the abstraction library doesn’t provide, I fall back on a customised polyfill library such as es5-shim. I prefer Lo-Dash over Underscore too, as I think Lo-Dash is starting to leave Underscore behind in terms of performance and features. I also like to use the likes of yepnope.js to conditionally load my polyfills based on whether they’re actually needed in the user’s browser. After all, there’s no point loading them if the browser already supports the feature, is there?

Polyfilling Object.create as discussed above, to ES5

You could use something like the following, which doesn’t accommodate the second argument (an object of property descriptors). Or just go with one of the next two choices, which is what I do:

- Use an abstraction like the lodash create method, which takes an optional second argument of properties to assign to the new object (note that these are plain values that get assigned, not property descriptors)

- Use a polyfill like this one.

if (typeof Object.create !== 'function') {

(function () {

var F = function () {};

Object.create = function (proto) {

if (arguments.length > 1) {

throw Error('Second argument not supported');

}

if (proto === null) {

throw Error('Cannot set a null [[Prototype]]');

}

if (typeof proto !== 'object' && typeof proto !== 'function') {

throw TypeError('Argument must be an object');

}

F.prototype = proto;

return new F();

};

})();

}

Polyfilling Object.getPrototypeOf as discussed above, to ES5

- Use an abstraction like the lodash isPlainObject method (source here), or…

- Use a polyfill like this one. Just keep in mind the gotcha.
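As I understand it, the gotcha with the usual ES3 fallback is this: without the non-standard __proto__, the only route to an object’s prototype is obj.constructor.prototype, and that’s only as reliable as the constructor property. A minimal sketch of such a fallback (getPrototypeOfFallback is a hypothetical name) and a classic ES3-style inheritance setup that fools it:

```javascript
// ES3-style fallback: trust the constructor property.
function getPrototypeOfFallback(obj) {
  return obj.constructor.prototype;
}

function Base() {}
function Derived() {}
Derived.prototype = new Base(); // ES3-style inheritance; Derived.prototype has no own constructor.

var d = new Derived();

console.log(d.constructor === Base); // true: inherited from Base.prototype
console.log(getPrototypeOfFallback(d) === Derived.prototype); // false: wrong answer
console.log(getPrototypeOfFallback(d) === Base.prototype); // true
console.log(Object.getPrototypeOf(d) === Derived.prototype); // true: the native ES5 method is right
```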

ECMAScript 6

I got a bit excited when I saw an earlier proposed prototype-for (also seen with the name prototype-of) operator: <| . Additional example here. This would have provided a terse syntax for providing an object literal with an object to use as its prototype. It looks like it must have lost traction though as it was removed in the June 15, 2012 Draft.

There are a few extra methods in ES6 that deal with prototypes, such as Object.setPrototypeOf, but after trawling the ECMAScript 6 draft spec, I found nothing at this stage that really stands out as revolutionising the way I write JavaScript or saving me mental effort or time. Of course I may have missed something. If anyone has seen something interesting to the contrary, I’d like to hear about it.

Yes, we’re getting classes in ES6, but they’re just an abstraction that gives us a terse, declarative mechanism for doing what we already do with functions used as constructors, their prototypes, and the objects (or instances, if you will) returned from those functions.
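To illustrate, here’s a sketch of roughly the same object modelled both ways (HobbitClass and HobbitFunction are hypothetical names). The class still produces a function, and its methods still end up on that function’s prototype. The two forms aren’t identical in every detail (class constructors throw if called without new, and class methods are non-enumerable, for example), but the prototype linkage is the same.

```javascript
// ES6 class syntax.
class HobbitClass {
  constructor(name) {
    this.name = name;
  }

  greet() {
    return 'Hi, I\'m ' + this.name + '.';
  }
}

// The constructor function and prototype we already write today.
function HobbitFunction(name) {
  this.name = name;
}

HobbitFunction.prototype.greet = function () {
  return 'Hi, I\'m ' + this.name + '.';
};

var a = new HobbitClass('Bilbo');
var b = new HobbitFunction('Frodo');

console.log(typeof HobbitClass); // 'function'
console.log(Object.getPrototypeOf(a) === HobbitClass.prototype); // true
console.log(a.hasOwnProperty('greet')); // false: greet lives on the prototype
console.log(a.greet()); // 'Hi, I'm Bilbo.'
console.log(b.greet()); // 'Hi, I'm Frodo.'
```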

Architectural Ideas that Prototypes Help With

This is a common approach I often use for fairly hot domain objects: a single set of accessor properties is added to the business object’s prototype, as you can see in the definePublicAccessors function of my Hobbit module (Hobbit.js) below.

First, a quick look at the tests/spec that drive the development. These are run using mocha with the help of a Makefile in the root directory of the module under test.

- Makefile

# The relevant section.
unit-test:
	@NODE_ENV=test ./node_modules/.bin/mocha \
		test/unit/*test.js test/unit/**/*test.js

- Hobbit-test.js

var requireFrom = require('requirefrom');

var assert = require('assert');

var should = require('should');

var shire = requireFrom('shire/');

// Hardcode $NODE_ENV=test for debugging.

process.env.NODE_ENV='test';

describe('shire/Hobbit business object unit suite', function () {

it('Should be able to instantiate a shire/Hobbit business object.', function (done) {

// Uncomment below lines if you want to debug.

//this.timeout(444000);

//setTimeout(done, 444000);

var Hobbit = shire('Hobbit');

var hobbit = new Hobbit();

// Properties should be declared but not initialised.

// No good checking for undefined alone, as that would be true whether it was declared or not.

hobbit.should.have.property('id');

(hobbit.id === undefined).should.be.true;

hobbit.should.have.property('typeOfPerson');

(hobbit.typeOfPerson === undefined).should.be.true;

hobbit.should.have.property('greeting');

(hobbit.greeting === undefined).should.be.true;

hobbit.should.have.property('occupation');

(hobbit.occupation === undefined).should.be.true;

hobbit.should.have.property('emailFrom');

(hobbit.emailFrom === undefined).should.be.true;

hobbit.should.have.property('name');

(hobbit.name === undefined).should.be.true;

done();

});

it('Should be able to set and get all properties of a shire/Hobbit business object.', function (done){

// Uncomment below lines if you want to debug.

//this.timeout(444000);

//setTimeout(done, 444000);

// Arrange

var Hobbit = shire('Hobbit');

var hobbit = new Hobbit();

// Act

hobbit.id = '32f4d01e-74dc-45e8-b3a8-9aa24840bc6a';

hobbit.typeOfPerson = 'short and hairy';

hobbit.greeting = {

intro: 'Hi, I\'m a ',

outro: ' type of person.'};

hobbit.occupation = 'mushroom hunter';

hobbit.emailFrom = 'Bilbo.Baggins@theshire.arn';

hobbit.name = 'Bilbo Baggins';

// Assert

hobbit.id.should.equal('32f4d01e-74dc-45e8-b3a8-9aa24840bc6a');

hobbit.typeOfPerson.should.equal('short and hairy');

hobbit.greeting.should.equal('Hi, I\'m a short and hairy type of person.');

hobbit.occupation.should.equal('mushroom hunter');

hobbit.emailFrom.should.equal('Bilbo.Baggins@theshire.arn');

hobbit.name.should.eql('Bilbo Baggins');

done();

});

});

- Now the business object itself: Hobbit.js


What’s happening here is that when a new Hobbit is created, the empty members object defined in the constructor is the only instance data. All of the Hobbit’s accessor properties are defined once, on Hobbit.prototype, when the Hobbit module is loaded, and the module exports the constructor function. So what we store on each instance are the values assigned in the Act section of Hobbit-test.js: just the strings (plus the small greeting object). So very little space is used by each object returned from invoking the Hobbit constructor that the Hobbit module exports.

// Could achieve a cleaner syntax with Object.create, but constructor functions are a little faster.

// As this will be hot code, it makes sense to favour performance in this case.

// Of course profiling may say it's not worth it, in which case this could be rewritten.

var Hobbit = (function () {

function Hobbit (/*Optionally Construct with DTO and serializer*/) {

// Todo: Implement pattern for enforcing new.

Object.defineProperty (this, 'members', {

value: {}

});

}

(function definePublicAccessors (){

Object.defineProperties(Hobbit.prototype, {

id: {

get: function () {return this.members.id;},

set: function (newValue) {

// Todo: Validation goes here.

this.members.id = newValue;

},

configurable: false, enumerable: true

},

typeOfPerson: {

get: function () {return this.members.typeOfPerson;},

set: function (newValue) {

// Todo: Validation goes here.

this.members.typeOfPerson = newValue;

},

configurable: false, enumerable: true

},

greeting: {

get: function () {

return this.members.greeting === undefined ?

undefined :

this.members.greeting.intro +

this.typeOfPerson +

this.members.greeting.outro;

},

set: function (newValue) {

// Todo: Validation goes here.

this.members.greeting = newValue;

},

configurable: false, enumerable: true

},

occupation: {

get: function () {return this.members.occupation;},

set: function (newValue) {

// Todo: Validation goes here.

this.members.occupation = newValue;

},

configurable: false, enumerable: true

},

emailFrom: {

get: function () {return this.members.emailFrom;},

set: function (newValue) {

// Todo: Validation goes here.

this.members.emailFrom = newValue;

},

configurable: false, enumerable: true

},

name: {

get: function () {return this.members.name;},

set: function (newValue) {

// Todo: Validation goes here.

this.members.name = newValue;

},

configurable: false, enumerable: true

}

});

})();

return Hobbit;

})();

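// Todo: JSON.parse provides a plain object from a DTO; pass it to the constructor to populate (hydrate) this DO.

// Note: JSON.stringify only serialises own enumerable properties, so as written JSON.stringify(hobbit) returns '{}'.

// A toJSON method (returning this.members, say) would let JSON.stringify provide the DTO from a hydrated hobbit.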

module.exports = Hobbit;

- Now running the tests (make unit-test)

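The two notes in Hobbit.js (the Todo about enforcing new, and serialising to a DTO) could be addressed along these lines. This is a minimal sketch on a cut-down Hobbit with only the name accessor, not the module as shipped: the instanceof check is one common way of enforcing new, and toJSON is the hook JSON.stringify looks for. Without toJSON, JSON.stringify returns '{}' for a Hobbit, because members is non-enumerable and the accessors live on the prototype rather than on the instance.

```javascript
// Cut-down sketch: only the name accessor from Hobbit.js is kept.
var Hobbit = (function () {
  function Hobbit() {
    // Enforce new: if called as a plain function, re-invoke as a constructor.
    if (!(this instanceof Hobbit)) {
      return new Hobbit();
    }
    Object.defineProperty(this, 'members', { value: {} });
  }

  Object.defineProperties(Hobbit.prototype, {
    name: {
      get: function () { return this.members.name; },
      set: function (newValue) { this.members.name = newValue; },
      configurable: false, enumerable: true
    },
    // JSON.stringify calls toJSON if present; expose the instance data as the DTO.
    toJSON: {
      value: function () { return this.members; }
    }
  });

  return Hobbit;
})();

var hobbit = Hobbit(); // No new, but we still get a Hobbit.
hobbit.name = 'Bilbo Baggins';

console.log(hobbit instanceof Hobbit); // true
console.log(JSON.stringify(hobbit)); // '{"name":"Bilbo Baggins"}'
```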

Flyweights using Prototypes

A couple of interesting examples of the Flyweight pattern implemented in JavaScript are by the GoF and Addy Osmani.

The GoF’s implementation of the FlyweightFactory makes extensive use of closure to store its flyweights and uses aggregation to create its ConcreteFlyweight from the Flyweight. It doesn’t use prototypes.

Addy Osmani has a free book, “Learning JavaScript Design Patterns”, containing an example of the Flyweight pattern which, IMO, is considerably simpler and more elegant. That said, the GoF want you to buy their product, so maybe they do a better job when you give them money. Closure is also used extensively in this example, but it’s a good example of how to leverage prototypes to share your less specific behaviour.
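The core idea can be sketched with prototypes directly. This is a hypothetical example (treeTypeFactory and plantTree are made-up names), not Addy Osmani’s or the GoF’s code: a factory caches one flyweight per distinct combination of intrinsic state, and each lightweight instance inherits from its flyweight via Object.create, holding only its extrinsic state.

```javascript
var treeTypeFactory = (function () {
  var cache = {}; // One flyweight per species/texture combination.

  return {
    get: function (species, texture) {
      var key = species + '|' + texture;
      if (!cache.hasOwnProperty(key)) {
        cache[key] = {
          species: species, // Intrinsic, shared state.
          texture: texture,
          describe: function () {
            return this.species + ' at (' + this.x + ', ' + this.y + ')';
          }
        };
      }
      return cache[key];
    },
    count: function () {
      return Object.keys(cache).length;
    }
  };
}());

// Each tree stores only its extrinsic state and delegates the rest to its flyweight.
function plantTree(x, y, species, texture) {
  var tree = Object.create(treeTypeFactory.get(species, texture));
  tree.x = x;
  tree.y = y;
  return tree;
}

var forest = [
  plantTree(1, 2, 'Oak', 'rough'),
  plantTree(5, 8, 'Oak', 'rough'),
  plantTree(3, 4, 'Birch', 'smooth')
];

console.log(treeTypeFactory.count()); // 2
console.log(Object.getPrototypeOf(forest[0]) === Object.getPrototypeOf(forest[1])); // true
console.log(forest[2].describe()); // 'Birch at (3, 4)'
```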

Mixins using Prototypes

Again, if you check out the last example of Mixins in Addy Osmani’s book, you’ll find quite an elegant example.

We can even do multiple inheritance using mixins, by adding whichever properties we want, from whatever objects we want, to the target object’s prototype.
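A minimal sketch of that idea (mixInto, canWalk, canSwim and Duck are hypothetical names): copy only the named methods from each source object onto the target constructor’s prototype, so every instance picks them up through the prototype chain.

```javascript
// Copies the named methods from source onto target (typically a prototype).
function mixInto(target, source, methodNames) {
  methodNames.forEach(function (name) {
    if (typeof source[name] !== 'function') {
      throw new TypeError('Source has no method named ' + name);
    }
    target[name] = source[name];
  });
  return target;
}

var canWalk = {
  walk: function () { return this.name + ' walks.'; }
};

var canSwim = {
  swim: function () { return this.name + ' swims.'; },
  dive: function () { return this.name + ' dives.'; }
};

function Duck(name) {
  this.name = name;
}

// Pick walk from one object and only swim (not dive) from another.
mixInto(Duck.prototype, canWalk, ['walk']);
mixInto(Duck.prototype, canSwim, ['swim']);

var duck = new Duck('Donald');

console.log(duck.walk()); // 'Donald walks.'
console.log(duck.swim()); // 'Donald swims.'
console.log(typeof duck.dive); // 'undefined'
```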

This is a similar concept to the post I wrote on Monkey Patching.

Mixins support the Open/Closed principle, where objects should be able to have their behaviour modified without their source code being altered.

Keep in mind though, that you shouldn’t just expect all consumers to know you’ve added additional behaviour. So think this through before using.

Factory functions using Prototypes

Again, a decent example of the Factory pattern is implemented in the “Learning JavaScript Design Patterns” book here.
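Here’s a minimal sketch of a factory function that leans on prototypes (createVehicle and vehiclePrototypes are hypothetical names): the factory picks a shared prototype by type, and each object it returns holds only its own data.

```javascript
var vehiclePrototypes = {
  car: {
    describe: function () { return 'Car with ' + this.doors + ' doors'; }
  },
  truck: {
    describe: function () { return 'Truck carrying ' + this.payload + ' kg'; }
  }
};

// Returns a new object that delegates to the prototype registered for type.
function createVehicle(type, options) {
  if (!Object.prototype.hasOwnProperty.call(vehiclePrototypes, type)) {
    throw new Error('Unknown vehicle type: ' + type);
  }
  var vehicle = Object.create(vehiclePrototypes[type]);
  Object.keys(options).forEach(function (key) {
    vehicle[key] = options[key];
  });
  return vehicle;
}

var car = createVehicle('car', { doors: 4 });
var truck = createVehicle('truck', { payload: 2000 });

console.log(car.describe()); // 'Car with 4 doors'
console.log(truck.describe()); // 'Truck carrying 2000 kg'
console.log(Object.getPrototypeOf(car) === vehiclePrototypes.car); // true
```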

There are many other areas you can get benefits from using prototypes in your code.

Prototypal Inheritance: Not Right for Every Job

Prototypes give us the power to share only those of a parent’s secrets that actually need to be shared. We have fine-grained control. If you’re thinking of using inheritance, be it classical or prototypal, ask yourself “Is the class/object I want to provide a parent for truly a more specific version of the proposed parent?”. This is the idea behind the Liskov Substitution Principle (LSP) and Design by Contract (DbC), which I’ve posted about here. Don’t just inherit because it’s convenient. I also discussed inheritance in my “javascript object creation patterns” post.

The general consensus is that composition should be favoured over inheritance. If composition makes sense once you’ve considered all the options, go for it; if not, look at inheritance. Why should composition be favoured over inheritance? Because when you compose your object from another object’s contract, your object (the one doing the composing) doesn’t inherit anything from, or need to know anything about, the composed object’s secrets. The composed object has complete freedom in how it minds its own business, so long as it provides a consistent contract for consumers. This gives us the much-loved polymorphism we crave without the crazy tight coupling of classical inheritance (inherit everything, even your father’s drinking problem :-s).

I’m pretty much in agreement with this when we’re talking about classical inheritance. When it comes to prototypal inheritance, we have a lot more flexibility and control around how we use the object that we’re deriving from and exactly what we inherit from it. So we don’t suffer the same “all or nothing” buy in and tight coupling as we do with classical inheritance. We get to pick just the good parts from an object that we decide we want as our parent. The other thing to consider is the memory savings of inheriting from a prototype rather than achieving your polymorphic behaviour by way of composition, which has us creating the composed object each time we want another specific object.

So in JavaScript, we really are spoilt for choice when it comes to how we go about getting our fix of polymorphism.

When surveys are carried out on…

Why Software Projects Fail

the following are the most common causes:

- Ambiguous Requirements

- Poor Stakeholder Involvement

- Unrealistic Expectations

- Poor Management

- Poor Staffing (not enough of the right skills)

- Poor Teamwork

- Forever Changing Requirements

- Poor Leadership

- Cultural & Ethical Misalignment

- Inadequate Communication

You’ll notice that technical reasons are very low on the list of why projects fail. Many of our software greats make the same point, but when a project does fail for technical reasons, it’s usually because the complexity got out of hand. So as developers focusing on the art of creating good code, our primary concern should be to reduce complexity, thus enhancing our ability to maintain the code going forward.

I think one of Edsger W. Dijkstra’s phrases sums it up nicely. “Simplicity is prerequisite for reliability”.

Stratification is a design principle that focuses on keeping the different layers in code autonomous, i.e. you should be able to work in one layer without having to go up or down into adjacent layers in order to fully understand the layer you’re working in. A layer’s internals should be able to change independently of the adjacent layers, without affecting them and without concern that a change in its own implementation will affect other layers. Modules are an excellent design pattern, used heavily to build medium to large JavaScript applications.

With composition, if you’re composing with contracts, this is exactly what you get.

References and interesting reads

- I also discuss the prototype linkage briefly in my post on JavaScript object creation patterns.

- Pitfalls of Constructor Functions

- Exemplar Patterns

- Exemplar theory

- Taking another look at classical inheritance

- Angus Croll on Prototypes

- Yehuda Katz: Understanding Prototypes in JavaScript